Gemma Moves One Step Closer to Curing Cancer

Edition #204 | 20 October 2025

How about a product that scrubs you from the web the way you’d clean a dataset? That means less spam, lower exposure to identity theft and fraud, and total peace of mind.

Your personal data powers someone else’s dashboard: those of data brokers, scammers, and spammers. Cloaked finds where your info lives, files removal requests, and verifies deletion. Plus you get unlimited email and phone aliases, so you never need to hand out your personal info again.

Google’s Gemma-Based Model Drives a 50% Increase in Antigen Presentation with Dual-Context Virtual Screening

In this edition, we will also be covering:

Meta-Blue Owl Hyperion deal

Anthropic’s cheaper model and US expansion

Google AI hub in India

Today’s Quick Wins

What happened: Google released C2S-Scale 27B, a 27B-parameter model built on the Gemma family. Using a dual-context virtual screen that simulated the effects of over 4,000 drugs, it identified silmitasertib combined with low-dose interferon as a candidate, and the combination drove a 50% increase in antigen presentation in immune-context-positive cells.

Why it matters: This is billed as the first experimentally validated, AI-driven discovery of a conditional immunotherapy amplifier, and it could accelerate the development of combination cancer therapies.

The takeaway: Integrate large-scale foundation models into virtual screening pipelines to uncover novel, context-specific therapeutic hypotheses.

Deep Dive

AI-Powered Dual-Context Virtual Screening Uncovers Silmitasertib Synergy

Scaling laws in biology appear to mirror those in language: larger models unlock emergent capabilities, not just improved accuracy. Google presents C2S-Scale 27B as a case in point, citing its generation of a novel hypothesis for cancer immunotherapy.

The Problem: Many tumors remain “cold,” evading immune detection because they present too few antigens, especially when interferon signaling is low.

The Solution: C2S-Scale 27B was tasked with finding a conditional amplifier: a drug that boosts antigen presentation only in a specific immune context. It compared two contexts:

Immune-Context-Positive: Patient-derived tumor-immune samples with low-level interferon signaling.

Immune-Context-Neutral: Isolated cell lines without immune signals.

The model ran virtual screens across both contexts and filtered for drugs that showed synergy only in the positive environment.

JetStream-Style Batching: Emulated high-throughput screening by batching simulations of over 4,000 drugs across both contexts.

Context-Split Scoring: Assigned conditional scores to highlight hits unique to immune-positive samples.

Emergent Reasoning: Leveraged scale-induced capabilities to resolve complex conditional dependencies.
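The batching and context-split scoring described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Google’s pipeline: `predict_batch` is a hypothetical stand-in for the model’s predicted antigen-presentation effect, the thresholds `min_positive` and `max_neutral` are arbitrary, and the toy numbers are invented. It scores every drug-context pair in batches, then keeps drugs that act in the immune-context-positive setting but not in the neutral one.

```python
from typing import Callable, Dict, Iterator, List, Sequence, Tuple

POSITIVE = "immune_context_positive"  # e.g. tumor-immune samples, low interferon
NEUTRAL = "immune_context_neutral"    # e.g. isolated cell lines, no immune signal

Pair = Tuple[str, str]
# Hypothetical interface: scores a batch of (drug, context) pairs in one call
# and returns one predicted antigen-presentation effect per pair.
PredictBatch = Callable[[Sequence[Pair]], List[float]]


def batched(items: Sequence[Pair], size: int) -> Iterator[Sequence[Pair]]:
    """Yield consecutive fixed-size chunks (the last one may be shorter)."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def dual_context_screen(
    drugs: Sequence[str],
    predict_batch: PredictBatch,
    batch_size: int = 64,
    min_positive: float = 0.3,   # assumed "meaningful effect" threshold
    max_neutral: float = 0.05,   # assumed "no effect" threshold
) -> List[Tuple[str, float]]:
    """Return (drug, conditional_score) hits, best first."""
    pairs = [(d, c) for d in drugs for c in (POSITIVE, NEUTRAL)]
    effects: Dict[Pair, float] = {}
    for batch in batched(pairs, batch_size):
        scores = predict_batch(batch)
        if len(scores) != len(batch):
            raise ValueError("predict_batch must return one score per pair")
        effects.update(zip(batch, scores))

    hits = []
    for drug in drugs:
        pos, neu = effects[(drug, POSITIVE)], effects[(drug, NEUTRAL)]
        # Context-split filter: active with immune signals, inert without them.
        if pos >= min_positive and neu <= max_neutral:
            hits.append((drug, pos - neu))  # conditional score
    return sorted(hits, key=lambda h: h[1], reverse=True)


if __name__ == "__main__":
    # Invented toy predictions, not C2S-Scale outputs.
    toy = {
        ("drug_a", POSITIVE): 0.45, ("drug_a", NEUTRAL): 0.01,  # conditional amplifier
        ("drug_b", POSITIVE): 0.40, ("drug_b", NEUTRAL): 0.38,  # acts in both contexts
        ("drug_c", POSITIVE): 0.02, ("drug_c", NEUTRAL): 0.00,  # inactive
    }
    drugs = ["drug_a", "drug_b", "drug_c"]
    hits = dual_context_screen(drugs, lambda batch: [toy[p] for p in batch], batch_size=4)
    print(hits)  # only drug_a survives the context-split filter
```

The filter deliberately rejects drugs like `drug_b` that raise antigen presentation everywhere: a conditional amplifier is defined by the difference between contexts, not by raw potency.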

The Results Speak for Themselves:

Baseline: Silmitasertib alone had a negligible effect on antigen presentation, and low-dose interferon alone had only a modest one.

Combination Treatment: Silmitasertib plus low-dose interferon yielded a 50% increase in MHC-I expression, the molecular machinery that displays antigens to T cells.

Business Impact: Provides a validated lead for combination immunotherapy pipelines, potentially reducing time-to-clinic by months.

What We’re Testing This Week

Optimizing Transformer Inference for Production Environments

As language models become central to business applications, the gap between research benchmarks and production performance becomes critical. We’ve been stress-testing inference optimization techniques that reduce latency without sacrificing accuracy, focusing on methods that work with existing model architectures rather than requiring expensive retraining.

Dynamic vs. Calibrated INT8 Quantization

We compared INT8 quantization approaches across three popular frameworks. PyTorch’s dynamic quantization reduced model size by 68% while staying within 2% of baseline accuracy on classification tasks, but ONNX Runtime’s static quantization, calibrated on sample data, delivered 3.2x faster inference on CPU-bound deployments with only 1.3% accuracy degradation. The key insight: calibration data selection matters enormously. Using representative production data rather than random validation samples improved post-quantization accuracy by 15-20 percentage points.
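The calibration effect is easy to reproduce in miniature. The sketch below uses only the Python standard library and is not how PyTorch or ONNX Runtime implement quantization: it derives a symmetric per-tensor INT8 scale from calibration activations (clipping at an assumed 99.9th percentile), then measures reconstruction error on "production" activations. The Gaussian distributions standing in for production data and for an unrepresentative calibration set are invented for illustration.

```python
import random
from typing import List, Sequence


def calibrate_scale(samples: Sequence[float], percentile: float = 99.9) -> float:
    """Symmetric INT8 scale: map the calibration range onto [-127, 127].

    Clipping at a high percentile instead of the raw max keeps a few outliers
    from stretching the range (an assumed choice; frameworks offer several).
    """
    mags = sorted(abs(x) for x in samples)
    idx = min(len(mags) - 1, int(len(mags) * percentile / 100.0))
    max_abs = mags[idx]
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize(values: Sequence[float], scale: float) -> List[int]:
    """Round to the nearest INT8 step and clamp out-of-range values."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    return [x * scale for x in q]


def mean_abs_error(values: Sequence[float], scale: float) -> float:
    """Average error introduced by a quantize/dequantize round trip."""
    restored = dequantize(quantize(values, scale), scale)
    return sum(abs(a - b) for a, b in zip(values, restored)) / len(values)


if __name__ == "__main__":
    rng = random.Random(0)
    # Invented stand-ins: production activations vs. two calibration sets.
    production = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
    representative = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
    mismatched = [rng.gauss(0.0, 5.0) for _ in range(10_000)]
    for name, calib in [("representative", representative), ("mismatched", mismatched)]:
        scale = calibrate_scale(calib)
        err = mean_abs_error(production, scale)
        print(f"{name:>14}: scale={scale:.4f}  MAE on production={err:.5f}")
```

A calibration set with a wider range than production data yields a coarser scale, so every production value is rounded to a bigger step. In the real frameworks, PyTorch’s `torch.ao.quantization.quantize_dynamic` computes activation ranges on the fly at inference time, while ONNX Runtime’s `onnxruntime.quantization.quantize_static` takes a `CalibrationDataReader` that feeds sample inputs; that reader is where representative production data would go.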

Speculative Decoding for Autoregressive Models

This technique uses a smaller draft model to propose several tokens ahead, then verifies them in parallel with the full model. On a 7B-parameter model, we achieved a 2.4x throughput improvement for long-form text generation with zero accuracy loss, because the verification step preserves the full model’s output. The sweet spot is pairing models from the same family (so they share a tokenizer), with the draft model 10-15x smaller than the target.
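Why there is no accuracy loss is clearest in a toy version of the greedy variant. In this plain-Python sketch the "models" are hypothetical functions that map a token prefix to the next greedy token; real systems score all draft positions in one batched forward pass, and when sampling they use a rejection-sampling acceptance rule rather than the exact-match check shown here. The property to notice is that every emitted token is the target model’s own choice, so the output is identical to decoding with the target alone.

```python
from typing import Callable, List, Tuple

# Hypothetical toy interface: a "model" maps a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], max_new: int) -> List[int]:
    """Baseline: one full-model step per generated token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out


def speculative_decode(
    target: NextToken, draft: NextToken, prompt: List[int], max_new: int, k: int = 4
) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, target verification passes)."""
    out = list(prompt)
    passes = 0
    while len(out) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens autoregressively.
        ctx = list(out)
        proposal: List[int] = []
        for _ in range(k):
            token = draft(ctx)
            proposal.append(token)
            ctx.append(token)

        # 2. The target checks every proposed position. Real systems score all
        #    k positions in ONE batched forward pass; this loop stands in for it.
        passes += 1
        accepted: List[int] = []
        for i, proposed in enumerate(proposal):
            expected = target(out + proposal[:i])
            accepted.append(expected)  # always the target's own choice
            if expected != proposed:
                break  # first mismatch: keep the target's correction, drop the rest
        else:
            # Every draft token matched, so the same pass yields one bonus token.
            accepted.append(target(out + proposal))
        out.extend(accepted)
    return out[: len(prompt) + max_new], passes


if __name__ == "__main__":
    def toy_target(prefix: List[int]) -> int:
        return (sum(prefix) * 7 + 3) % 10

    def toy_draft(prefix: List[int]) -> int:
        # Agrees with the target except at every third position.
        guess = toy_target(prefix)
        return (guess + 1) % 10 if len(prefix) % 3 == 0 else guess

    tokens, passes = speculative_decode(toy_target, toy_draft, [1], max_new=20)
    assert tokens == greedy_decode(toy_target, [1], 20)
    print(f"20 tokens in {passes} verification passes instead of 20 target steps")
```

Throughput improves only when the draft agrees with the target often enough that each verification pass accepts several tokens, which is why a same-family draft model tends to work best.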

💵 50% Off All Live Bootcamps and Courses

📬 Daily Business Briefings: Every edition covers a different theme

📘 1 Free E-book Every Week

🎓 FREE Access to All Webinars & Masterclasses

📊 Exclusive Premium Content

Recommended Tools

This Week’s Game-Changers

LangSmith Production Monitoring

End-to-end observability for LLM applications with automatic prompt versioning and A/B testing. Tracks token usage, latency, and output quality across 500+ model deployments.

Weights & Biases Weave

Purpose-built evaluation framework for generative AI that automates regression testing across model updates. Catches quality degradation before deployment with customizable scoring functions.

Modal Serverless GPU

Deploy ML inference endpoints with automatic scaling from zero to thousands of requests per second. Pay only for the compute time you use, with sub-second cold starts.


Lightning Round

3 Things to Know Before Signing Off

Meta, Blue Owl Seal $30 Billion Private Capital Deal for AI

Meta is close to finalizing a roughly $30 billion package to fund its Hyperion data center in Louisiana, with Blue Owl taking the majority stake while Meta keeps about 20%. Morgan Stanley arranged the debt and equity through a special purpose vehicle (SPV) to support the project.

US Start-Up Anthropic Unveils Cheaper Model to Widen AI’s Appeal

Anthropic unveiled a more affordable AI model to broaden its appeal and lower barriers to adoption, amid rising demand for cost-efficient, scalable AI services in the US market and beyond.

Google to Build $15 Billion AI and Data Center Hub in Visakhapatnam, India

Google plans a $15 billion AI data center hub in Visakhapatnam, India, a major investment to expand AI infrastructure and regional capacity for cloud and machine-learning workloads.

Follow Us:

LinkedIn | X (formerly Twitter) | Facebook | Instagram

Please like this edition and share your thoughts in the comments.

EXCLUSIVE LIMITED-TIME OFFER: 50% OFF Newsletter Sponsorships!

Get 50% off all the prices listed below.

Actual Sponsorship Prices

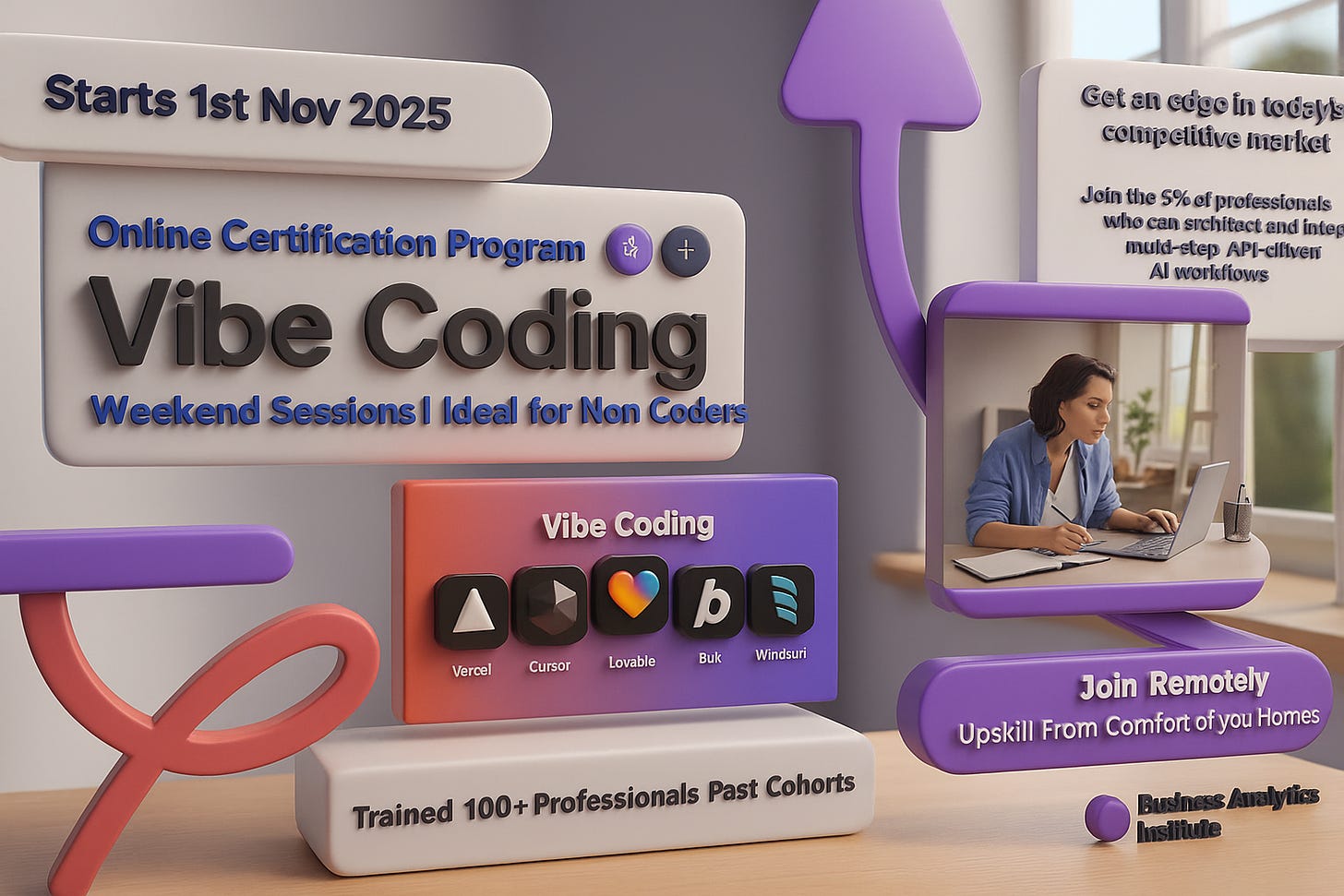

Vibe Coding Certification - Live Online

Weekend Sessions | Ideal for Non-Coders | Learn to Code Using AI