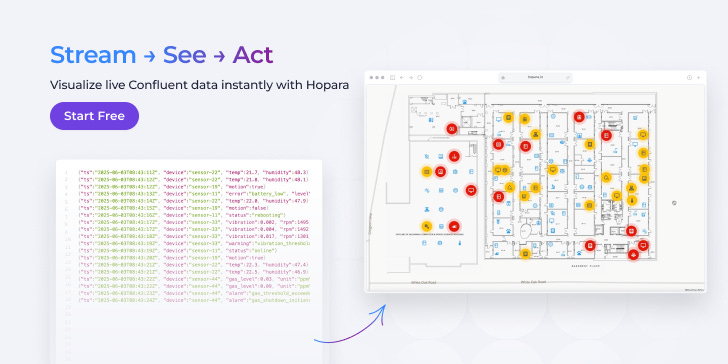

Real-time Streaming Visualization for Confluent

Hopara connects directly to Confluent to give teams a fast, interactive view of real-time data, right out of the stream. Perfect for data teams who need clarity, fast.

See how it works in this 2-minute demo and start your 15-day free trial with guided onboarding included.

Hello!

Welcome to today's edition of Business Analytics Review!

We're diving into the fascinating world of hyperparameters in machine learning, a topic that’s critical for anyone looking to harness the power of AI in business analytics. Hyperparameters are the knobs and dials you set before training a model, guiding how it learns from data. Unlike parameters, which the model learns during training (like the weights in a neural network), hyperparameters are your chance to shape the learning process. Get them right, and your model can deliver stunning accuracy; get them wrong, and it might flounder.

In this edition, we’ll explore three key hyperparameters (learning rate, max depth, and regularization strength) that play pivotal roles in popular algorithms like neural networks, decision trees, and linear models. We’ll also share resources for further learning and introduce a trending AI tool to simplify hyperparameter tuning. Let’s get started!

Hyperparameters and Their Importance

Imagine you’re baking a cake. The ingredients (data) and the recipe (algorithm) are crucial, but so are the oven settings: temperature and baking time. Hyperparameters are like those settings in machine learning. They control aspects like model complexity, learning speed, and generalization to new data. In business analytics, where models predict customer behavior or optimize supply chains, tuning hyperparameters can mean the difference between a model that drives profits and one that misses the mark.

For example, a retail company using a machine learning model to forecast sales might struggle with inaccurate predictions if the hyperparameters aren’t tuned properly. By adjusting these settings, data scientists can ensure the model captures patterns without overfitting to historical data. Let’s break down three key hyperparameters you’ll encounter in popular algorithms.

Key Hyperparameters in Popular Algorithms

Learning Rate

The learning rate is a hyperparameter used in algorithms that rely on gradient descent, such as neural networks or gradient boosting models like XGBoost. It determines the size of the steps the model takes to minimize errors during training. A high learning rate might speed things up but risks overshooting the optimal solution, like a cyclist pedaling too fast down a hill. A low learning rate ensures smaller, cautious steps but can make training painfully slow.
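To make the step-size idea concrete, here is a minimal sketch, assuming a toy one-parameter loss L(w) = (w - 3)**2 rather than a real model. Each update moves w against the gradient by learning_rate × gradient. The three rates are illustrative picks (too small, reasonable, too large), and the function name `gradient_descent` is hypothetical.

```python
def gradient_descent(learning_rate, start=0.0, steps=50):
    """Minimize the toy loss L(w) = (w - 3)**2 by gradient descent; return the final w."""
    w = start
    for _ in range(steps):
        grad = 2 * (w - 3)          # derivative of (w - 3)**2 with respect to w
        w -= learning_rate * grad   # step against the gradient, scaled by the learning rate
    return w


for lr in (0.001, 0.1, 1.1):  # too small, reasonable, too large (illustrative picks)
    print(f"learning_rate={lr}: final w = {gradient_descent(lr):.4f}")
```

After 50 steps, the smallest rate is still far from the minimum at w = 3, the middle rate lands on it, and 1.1 overshoots by a larger margin on every step until it diverges.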

For instance, in a neural network predicting customer churn for a telecom company, a well-tuned learning rate ensures the model learns patterns efficiently without erratic updates. Typical values range from 0.001 to 0.1, but finding the sweet spot often requires experimentation.
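The experimentation step can be sketched as a log-spaced sweep across the 0.001 to 0.1 range, keeping whichever rate reaches the lowest loss within a fixed budget of 30 steps. This is a stdlib-only illustration on a hypothetical toy loss L(w) = 15 * (w - 3)**2, not a churn model; in practice you would compare validation loss from real training runs.

```python
def final_loss(learning_rate, steps=30):
    """Hypothetical toy loss L(w) = 15 * (w - 3)**2, minimized from w = 0 by gradient descent."""
    w = 0.0
    for _ in range(steps):
        w -= learning_rate * 30 * (w - 3)  # 30 * (w - 3) is the gradient of 15 * (w - 3)**2
    return 15 * (w - 3) ** 2


# Log-spaced candidates spanning the typical 0.001 to 0.1 range.
candidates = [0.001, 0.003, 0.01, 0.03, 0.1]
results = {lr: final_loss(lr) for lr in candidates}
best_lr = min(results, key=results.get)

for lr, loss in results.items():
    print(f"learning_rate={lr:<6} loss after 30 steps = {loss:.3g}")
print("best learning rate in this sweep:", best_lr)
```

On this toy loss, 0.1 diverges and 0.001 barely moves, so the sweep settles on 0.03, an interior value. Which rate wins on a real model depends on its data and architecture.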

Max Depth

Max depth is a critical hyperparameter in tree-based models like decision trees and random forests, which are popular in business analytics for their interpretability. It limits how deep the tree can grow, controlling its complexity. A shallow tree (low max depth) might miss intricate patterns, leading to underfitting, while a deep tree risks overfitting, memorizing the training data instead of generalizing.

Consider a decision tree used to segment customers for a marketing campaign. Setting max depth to 3 might keep the model simple but miss nuanced behaviors, while a depth of 20 could overfit, fitting past data too closely. A balanced max depth, often between 3 and 10, helps the model generalize to new customers.
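The effect of max depth can be sketched with a tiny regression tree in plain Python, assuming one numeric feature and deliberately contrived toy data rather than a real customer dataset. The target steps from 0 to 10 at x = 8, plus a +/-1 wobble whose sign is flipped on the held-out points, so a tree that memorizes the wobble is penalized. The helper names (`build_tree`, `predict`, `count_leaves`, `mse`) are hypothetical; in practice you would use a library implementation such as scikit-learn's `DecisionTreeClassifier` or `DecisionTreeRegressor` and its `max_depth` parameter.

```python
def sse(points):
    """Sum of squared errors of (x, y) points around their mean y."""
    mean = sum(y for _, y in points) / len(points)
    return sum((y - mean) ** 2 for _, y in points)


def build_tree(points, max_depth, depth=0):
    """Grow a tree greedily; a leaf is a mean (float), a node is (threshold, left, right)."""
    xs = sorted({x for x, _ in points})
    if depth >= max_depth or len(xs) < 2:
        return sum(y for _, y in points) / len(points)  # leaf: predict the mean target

    def split_cost(t):
        # Total squared error if we send x < t left and x >= t right.
        return sse([p for p in points if p[0] < t]) + sse([p for p in points if p[0] >= t])

    t = min(xs[1:], key=split_cost)  # best threshold among the distinct x values
    left = [p for p in points if p[0] < t]
    right = [p for p in points if p[0] >= t]
    return (t, build_tree(left, max_depth, depth + 1), build_tree(right, max_depth, depth + 1))


def predict(tree, x):
    """Walk from the root to a leaf and return that leaf's mean."""
    while isinstance(tree, tuple):
        t, left, right = tree
        tree = left if x < t else right
    return tree


def count_leaves(tree):
    return 1 if not isinstance(tree, tuple) else count_leaves(tree[1]) + count_leaves(tree[2])


def mse(tree, points):
    return sum((predict(tree, x) - y) ** 2 for x, y in points) / len(points)


# Contrived toy data: a step at x = 8 plus a +/-1 wobble that flips sign on held-out points.
def signal(x):
    return 0 if x < 8 else 10


def wobble(k):
    return 1 if k % 2 == 0 else -1


train = [(k, signal(k) + wobble(k)) for k in range(16)]
holdout = [(k + 0.5, signal(k + 0.5) - wobble(k)) for k in range(16)]

for depth in (1, 3, 20):
    tree = build_tree(train, max_depth=depth)
    print(f"max_depth={depth:>2}  leaves={count_leaves(tree):>2}  "
          f"train MSE={mse(tree, train):.2f}  held-out MSE={mse(tree, holdout):.2f}")
```

Here max_depth=1 already captures the step (train and held-out MSE both 1.00), while max_depth=20 isolates every training point, driving training error to 0.00 and raising held-out error to 4.00. A tree of depth d has at most 2^d leaves, which is why depth is such a direct control on complexity.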

Regularization Strength

Regularization strength, set by alpha in Ridge/Lasso regression and, inversely, by C in support vector machines (a larger C means weaker regularization), prevents overfitting by penalizing overly complex models. It’s like adding guardrails to keep a model from veering too far into the training data’s quirks. In linear models, higher regularization strength shrinks coefficients, simplifying the model, while lower values allow more flexibility.
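A minimal sketch of how alpha shrinks a coefficient, assuming one-feature ridge regression without an intercept on small made-up, centered data. Minimizing sum((y - w*x)**2) + alpha * w**2 has the closed form w = sum(x*y) / (sum(x*x) + alpha), so a larger alpha pulls w toward zero. `ridge_coef` is a hypothetical helper name.

```python
# Made-up, centered toy data with a roughly linear relationship (slope near 2).
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-4.1, -1.9, 0.2, 2.1, 3.9]


def ridge_coef(xs, ys, alpha):
    """Minimize sum((y - w*x)**2) + alpha * w**2 for a single feature, no intercept."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + alpha)  # closed-form solution; alpha inflates the denominator


for alpha in (0.0, 1.0, 10.0, 100.0):
    print(f"alpha={alpha:>5}: w = {ridge_coef(xs, ys, alpha):.3f}")
```

On this toy data sum(x*y) = 20 and sum(x*x) = 10, so w falls from 2.0 with no penalty to 1.0 at alpha = 10 and about 0.18 at alpha = 100. For an SVM's C the direction is reversed: raising C weakens the penalty.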

In a financial forecasting model, strong regularization might ensure the model doesn’t overreact to market noise, improving predictions for future trends. For example, in Lasso regression, a sufficiently large alpha (such as 1.0, depending on how the features are scaled) can shrink the coefficients of irrelevant features to exactly zero, effectively removing them and making the model more interpretable for business decisions.
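Lasso's ability to drop features comes from its L1 penalty: in coordinate descent, each coefficient is soft-thresholded, so any coefficient whose correlation with the current residual is smaller than alpha becomes exactly zero. The sketch below assumes the objective documented for scikit-learn's `Lasso`, (1 / (2n)) * ||y - Xw||^2 + alpha * ||w||_1, with no intercept and toy data where y = 3*x1 + 0.2*x2. The helper names `soft_threshold` and `lasso` are hypothetical.

```python
def soft_threshold(rho, alpha):
    """Shrink rho toward zero by alpha; values inside [-alpha, alpha] become exactly 0."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0


def lasso(X, y, alpha, sweeps=100):
    """Coordinate descent for (1 / (2n)) * ||y - Xw||^2 + alpha * ||w||_1 (no intercept).
    X is a list of rows; returns the coefficient list w."""
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(sweeps):
        for j in range(p):
            # Partial residual: the current prediction with feature j left out.
            resid = [y[i] - sum(X[i][k] * w[k] for k in range(p) if k != j) for i in range(n)]
            rho = sum(X[i][j] * resid[i] for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            w[j] = soft_threshold(rho, alpha) / z
    return w


# Toy data: y = 3 * x1 + 0.2 * x2, with orthogonal columns x1 and x2.
X = [[1, 1], [1, -1], [-1, 1], [-1, -1]]
y = [3.2, 2.8, -2.8, -3.2]

for alpha in (0.0, 0.1, 1.0):
    w = lasso(X, y, alpha)
    print(f"alpha={alpha}: w = [{w[0]:.2f}, {w[1]:.2f}]")
```

Because the two toy columns are orthogonal, each coefficient is simply its least-squares value shrunk by alpha: [3.00, 0.20] at alpha=0, [2.90, 0.10] at 0.1, and [2.00, 0.00] at 1.0, where the weak second feature is eliminated. Real features are rarely orthogonal or on the same scale, so standardize them before comparing alpha values.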

A Real-World Analogy

To make this relatable, think of teaching a child to ride a bike. The learning rate is how much you adjust their balance with each wobble—too much, and they fall; too little, and they don’t progress. Max depth is like the number of instructions you give at once—too many, and they’re overwhelmed; too few, and they don’t learn enough. Regularization strength is like deciding how much to rely on training wheels—too much dependence, and they won’t ride independently; too little, and they might crash. Tuning these hyperparameters is about finding the right balance for success.

Industry Insights

In the business world, hyperparameter tuning is often automated to save time and boost efficiency. Cloud platforms such as Amazon SageMaker and Google Vertex AI offer automated hyperparameter optimization, allowing data scientists to focus on strategy rather than manual tweaking. For instance, a logistics company might use tuned decision trees to optimize delivery routes, where a well-chosen max depth helps the model balance detail and generalization, directly affecting fuel costs and delivery times.

Recent discussions on X highlight the growing importance of advanced tuning methods. For example, a post by @HideyoOosawa on June 5, 2025, praised μ-Param and μTransfer for transferring hyperparameters from small to large neural networks, saving computational resources (X post). Another post by @TDataScience on June 9, 2025, compared Bayesian Optimization to Grid Search, showcasing real-world applications with KerasTuner (X post). These insights underscore the industry’s shift toward smarter, automated tuning techniques.

Recommended Reads

Hyperparameters in Machine Learning

A comprehensive guide to key hyperparameters in tree-based models and other popular algorithms, with practical code examples using scikit-learn.

Hyperparameters in Machine Learning

An accessible introduction using analogies like cooking recipes to explain hyperparameters across algorithms like decision trees and SVMs.

Hyperparameter Tuning

A detailed overview of tuning techniques like GridSearchCV, RandomizedSearchCV, and Bayesian Optimization, with examples for logistic regression and decision trees.
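Grid search and randomized search differ only in how candidate settings are chosen. The sketch below mimics the idea behind scikit-learn's `GridSearchCV` and `RandomizedSearchCV` in plain Python; it is not their API. `validation_score` is a hypothetical stand-in for training a model and scoring it with cross-validation, with its best region placed at a learning rate of 0.01 and a max depth of 6 purely for illustration.

```python
import itertools
import math
import random


def validation_score(learning_rate, max_depth):
    """Hypothetical stand-in for 'train the model, return its cross-validated score'.
    Peaks at learning_rate=0.01, max_depth=6 by construction."""
    return -(math.log10(learning_rate) + 2) ** 2 - 0.1 * (max_depth - 6) ** 2


# Grid search: evaluate every combination of a few hand-picked values.
grid = {"learning_rate": [0.001, 0.01, 0.1], "max_depth": [3, 6, 10]}
grid_configs = list(itertools.product(grid["learning_rate"], grid["max_depth"]))
grid_best = max(grid_configs, key=lambda c: validation_score(*c))

# Random search: draw the same number of configurations from continuous ranges.
rng = random.Random(42)
random_configs = [
    (10 ** rng.uniform(-3, -1), rng.randint(3, 10))  # log-uniform rate, integer depth
    for _ in range(len(grid_configs))
]
random_best = max(random_configs, key=lambda c: validation_score(*c))

print("grid best:  ", grid_best, round(validation_score(*grid_best), 3))
print("random best:", random_best, round(validation_score(*random_best), 3))
```

Both searches spend the same nine evaluations, but random search tries nine different learning rates rather than three, which helps when only a few hyperparameters really matter. Bayesian optimization goes a step further by using past scores to pick the next configuration.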

Trending in AI and Data Science

Let’s catch up on some of the latest happenings in the world of AI and Data Science.

Anthropic’s AI Writes Its Own Blog

Anthropic has launched an AI-driven blog, where its AI generates articles under human supervision, blending automation with editorial oversight to share company updates and insights.

Kuwait Investment Authority Backs AI Infrastructure

The Kuwait Investment Authority has joined a major AI infrastructure partnership, aiming to boost regional technology capabilities and support the development of advanced digital solutions in the Middle East.

Beijing Academy Launches RoboBrain AI Model

The Beijing Academy has unveiled RoboBrain, an open-source AI model designed for humanoid robots, marking a significant step in China’s efforts to advance robotics and artificial intelligence research.

Trending AI Tool: Ray Tune

Ray Tune is a powerful hyperparameter tuning library for machine learning, built on Ray. It supports search strategies such as random search, grid search, and Bayesian optimization, integrates external optimizers like Optuna, and offers schedulers such as ASHA that stop unpromising trials early. Users define search spaces with tune.choice(), tune.uniform(), and similar functions. It integrates with popular ML frameworks and runs hyperparameter optimization in a distributed, efficient, and scalable way.
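Ray Tune's early stopping (for example, its ASHA scheduler) builds on successive halving: start many configurations on a small budget, keep only the most promising fraction, and give the survivors more budget. The stdlib-only sketch below shows the simpler synchronous version of that idea. It is not Ray Tune code, and `loss_after` is a hypothetical stand-in for a real training run (gradient descent on the toy loss (w - 3)**2).

```python
import random


def loss_after(lr, steps):
    """Hypothetical stand-in for training: gradient descent on (w - 3)**2 from w = 0."""
    w = 0.0
    for _ in range(steps):
        w -= lr * 2 * (w - 3)
    return (w - 3) ** 2


def successive_halving(configs, min_steps=1, eta=2):
    """Train every config briefly, keep the best 1/eta, multiply the budget by eta, repeat."""
    survivors, steps = list(configs), min_steps
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda lr: loss_after(lr, steps))
        survivors = ranked[: max(1, len(ranked) // eta)]  # stop the rest early
        steps *= eta  # survivors get a larger training budget next round
    return survivors[0]


rng = random.Random(0)
# Sample 8 learning rates log-uniformly between 0.001 and 1.
candidates = [10 ** rng.uniform(-3, 0) for _ in range(8)]
best = successive_halving(candidates)
print(f"best learning rate: {best:.4f}")
```

Only the top half of each round ever receives more training, so most of the compute goes to promising configurations. ASHA removes the round-by-round synchronization so that trials can be promoted as soon as they finish.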


Learners who enroll TODAY will get e-books worth $500 FREE.

For any questions, mail us at vipul@businessanalyticsinstitute.com