Hello!!

Welcome to the new edition of Business Analytics Review!

In today’s edition, we are going to discuss Recurrent Neural Networks (RNNs), which are deep learning models specifically designed to process sequential data. They are particularly effective in tasks where the order of inputs is crucial, such as natural language processing (NLP), speech recognition, and time series prediction.

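They maintain an internal memory through feedback loops, allowing them to capture temporal dependencies and "remember" past inputs to influence future outputs. Standard RNNs, however, struggle to retain information across long sequences because of the vanishing gradient problem, which has led to the development of variants like LSTMs and GRUs.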

Key Features of RNNs

Sequential Data Processing: RNNs handle data sequences where each element depends on previous ones. This is different from feedforward networks, which process inputs independently

Internal Memory: RNNs maintain an internal memory, known as the hidden state, which allows them to "remember" past inputs and use this information to influence future outputs

Recurrent Connections: The output from one time step is fed back into the network at the next time step, enabling the capture of temporal dependencies

Shared Parameters: Unlike feedforward networks, RNNs reuse the same parameters at every time step, which keeps the number of parameters independent of sequence length. Training them requires a specialized algorithm, backpropagation through time (BPTT)
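To make the features above concrete, here is a minimal sketch of a vanilla RNN forward pass in NumPy. The function name rnn_forward, the weight names (W_xh, W_hh, b_h), the tanh activation, and the sizes are illustrative assumptions, not something taken from a particular library.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return the hidden state at each step."""
    hidden_size = W_hh.shape[0]
    h = np.zeros(hidden_size)  # initial hidden state: the network's "memory"
    states = []
    for x_t in inputs:  # one iteration per time step
        # Recurrent connection: the previous hidden state h feeds into the new one.
        # Shared parameters: the same W_xh, W_hh, b_h are reused at every step.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

# Hypothetical sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
seq_len, input_size, hidden_size = 5, 3, 4
inputs = rng.normal(size=(seq_len, input_size))
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

states = rnn_forward(inputs, W_xh, W_hh, b_h)
print(states.shape)  # one hidden state per time step
```

Because each hidden state depends on the one before it, feeding the same inputs in a different order generally produces a different final state, which is what separates this from a feedforward network applied to each input independently.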

Types of RNNs

Unidirectional RNNs: Process sequences in one direction, from past to present

Bidirectional RNNs (BRNNs): Process sequences in both forward and backward directions, allowing for better understanding of context

Long Short-Term Memory (LSTM) Networks: An extension of RNNs designed to handle long-term dependencies more effectively by mitigating the vanishing gradient problem

Gated Recurrent Units (GRUs): Similar to LSTMs but more computationally efficient, since they use fewer gates and do not keep a separate cell state
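One rough way to see why GRUs are cheaper than LSTMs is to count parameters. The sketch below assumes the common textbook formulation, in which a vanilla RNN has one weight block, a GRU has three (update gate, reset gate, candidate state), and an LSTM has four (input, forget, and output gates plus the candidate cell state), each with a single bias vector. The function name and the layer sizes are hypothetical, and real libraries may report slightly different totals (PyTorch, for instance, stores two bias vectors per block).

```python
def recurrent_param_count(input_size, hidden_size, num_blocks):
    """Parameters in one recurrent layer under the textbook formulation."""
    # Each block has input weights, recurrent weights, and one bias vector.
    per_block = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return num_blocks * per_block

# Number of weight blocks per architecture (assumption: textbook formulation).
BLOCKS = {"vanilla RNN": 1, "GRU": 3, "LSTM": 4}

# Hypothetical layer sizes, chosen only for illustration.
counts = {name: recurrent_param_count(128, 256, n) for name, n in BLOCKS.items()}
for name, count in counts.items():
    print(f"{name}: {count:,} parameters")
```

Under these assumptions a GRU layer carries three quarters of the parameters of an LSTM layer of the same size, which is where most of its speed advantage comes from.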


Recommended Video

The video explains Recurrent Neural Networks (RNNs) as neural networks designed to handle sequential data. Unlike regular neural networks, RNNs have loops, enabling them to use information from the past by maintaining a hidden state. The hidden state is updated at each time step, allowing the network to learn and remember previous inputs. Key challenges include vanishing/exploding gradients and complexity in training, which are addressed by architectures like LSTMs and GRUs.
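The vanishing and exploding gradient problem mentioned above can be illustrated with a toy scalar RNN. This is a sketch under simplifying assumptions, not a real training loop: the network has a single hidden unit, receives no input, and the function name gradient_through_time is made up for this example. The exploding case uses a linear recurrence (no tanh), because tanh saturates and would mask the growth.

```python
import math

def gradient_through_time(w, steps, use_tanh=True):
    """Return d h_T / d h_0 for the scalar recurrence h_t = f(w * h_{t-1})."""
    h = 0.5      # arbitrary initial hidden state
    grad = 1.0   # running product of per-step derivatives (chain rule)
    for _ in range(steps):
        pre = w * h
        if use_tanh:
            h = math.tanh(pre)
            grad *= w * (1.0 - h * h)  # derivative of tanh(w * h) w.r.t. h
        else:
            h = pre
            grad *= w                  # derivative of w * h w.r.t. h
    return grad

vanishing = gradient_through_time(0.5, 50)                  # recurrent weight below 1
exploding = gradient_through_time(1.5, 50, use_tanh=False)  # recurrent weight above 1
print(f"vanishing: {vanishing:.3e}")
print(f"exploding: {exploding:.3e}")
```

Backpropagation through time multiplies one such factor per time step, so over 50 steps a weight below 1 drives the gradient toward zero while a weight above 1 makes it blow up. LSTMs and GRUs use gating to give gradients a more stable path through time.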

Trending in AI and Data Science

Let’s catch up on some of the latest happenings in the world of AI and Data Science:

GM’s path to the future gets an AI infusion from NVIDIA

General Motors partners with Nvidia to integrate AI chips for autonomous vehicles, advanced driver assistance, and factory optimization systems

DSTA Selects Oracle Cloud Infrastructure for Ministry of Defence Singapore

Oracle partners with Singapore’s defence agency to provide air-gapped cloud and AI services, enhancing military operations and cybersecurity

Adobe rolls out AI agents for online marketing tools

Adobe introduces AI agents for personalized customer experiences, marketing automation, and workflow optimization, including the new Agent Orchestrator platform

Tool of the Day: Vitis AI

Vitis AI, primarily designed for CNNs, can be adapted for Recurrent Neural Networks (RNNs) through custom configurations and optimizations. It supports RNN deployment via the Vitis AI Runtime (VART) APIs, requiring developer customization. While not the primary focus, Vitis AI can accelerate RNN tasks on AMD platforms, offering quantization and deployment capabilities for optimized performance.

Learn More

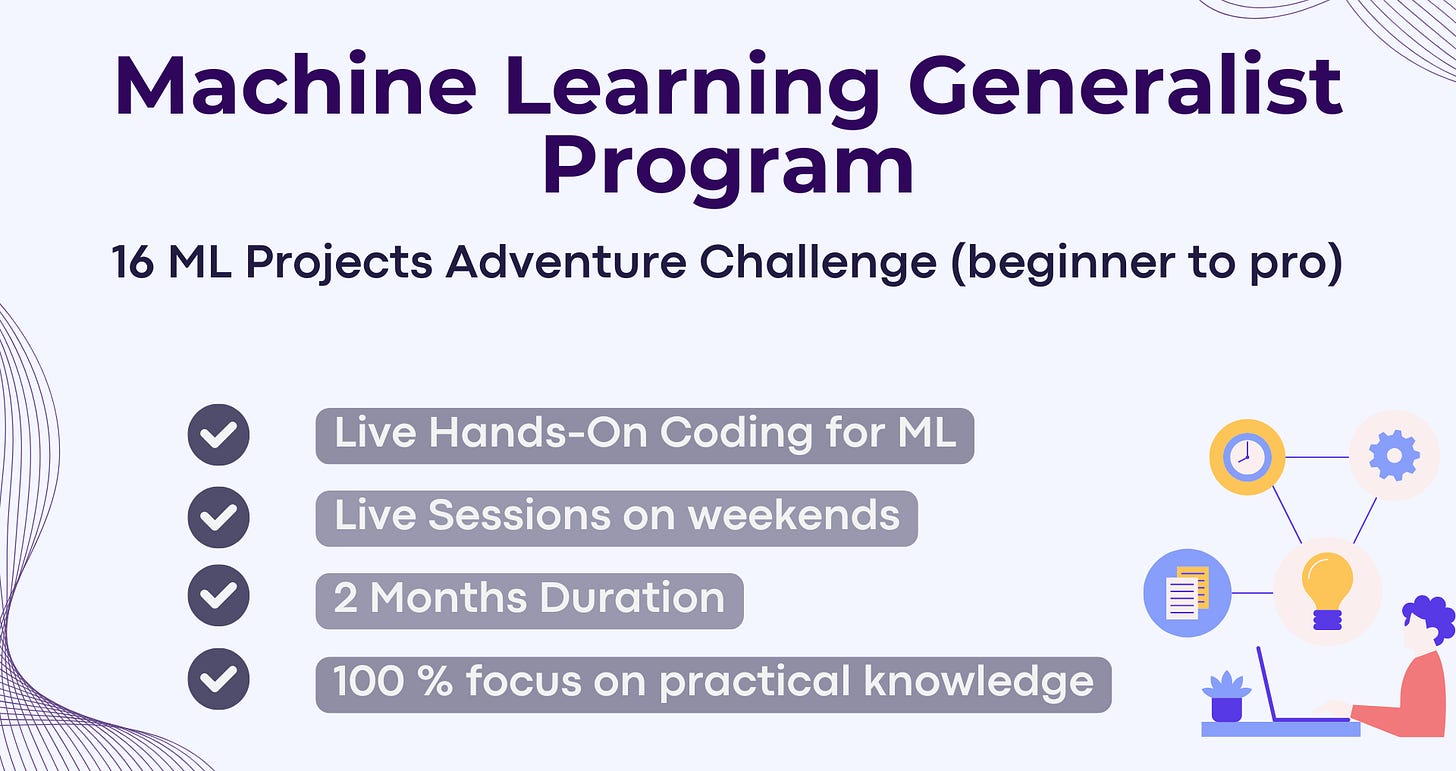

Learn how to develop a chatbot for customer support using LangChain in one of the projects, create an AI-powered legal document search, and much more.

To apply for a scholarship, reach us at vipul@businessanalyticsinstitute.com
