Ensemble Model Diversity

Edition #163 | 16 July 2025

Hello!

Welcome to today's edition of Business Analytics Review!

Today, we're diving into the fascinating world of ensemble learning, with a special focus on the critical role of diversity in ensemble models. If you've ever wondered why combining multiple models can lead to better predictions or how techniques like bootstrap aggregation and model randomization make this possible, you're in for an insightful read.

What is Ensemble Learning?

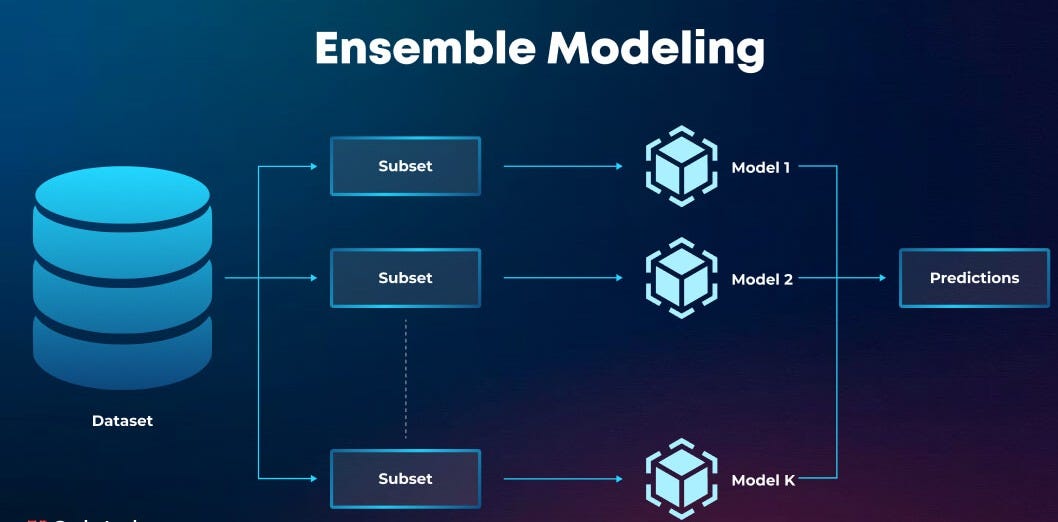

Ensemble learning is a cornerstone of modern machine learning, where multiple models—often called base learners or weak learners—are combined to produce a single, more accurate prediction. The idea is simple yet powerful: just as a group of people with varied expertise can make better decisions than a single individual, combining models can yield superior results compared to relying on one model alone. By aggregating predictions through methods like averaging (for regression) or majority voting (for classification), ensembles reduce errors and improve robustness.
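As a minimal sketch of the two aggregation rules mentioned above, the snippet below averages numeric predictions and takes a majority vote over class labels. The function names and the toy predictions are illustrative assumptions, not part of any particular library.

```python
from collections import Counter
from statistics import mean

def average_predictions(predictions):
    """Regression: predictions holds one list per model; average per sample."""
    return [mean(sample_preds) for sample_preds in zip(*predictions)]

def majority_vote(predictions):
    """Classification: predictions holds one list per model; most common label per sample.

    On a tie, Counter.most_common returns the label that was seen first.
    """
    return [Counter(sample_preds).most_common(1)[0][0] for sample_preds in zip(*predictions)]

# Made-up outputs from three models on two samples.
regression_outputs = [[10.0, 20.0], [12.0, 18.0], [14.0, 22.0]]
class_outputs = [["churn", "stay"], ["churn", "churn"], ["stay", "stay"]]

print(average_predictions(regression_outputs))  # [12.0, 20.0]
print(majority_vote(class_outputs))             # ['churn', 'stay']
```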

This approach has become a staple in AI and machine learning, powering applications from customer churn prediction to fraud detection. Its success, however, hinges on one key factor: diversity among the models.

Why Diversity Matters in Ensembles

Diversity in ensemble learning refers to the differences in predictions or errors made by individual models. For an ensemble to outperform its individual components, each model should ideally make different mistakes. When models err differently, their errors can cancel out when combined, leading to a more accurate and reliable prediction.

Imagine you're trying to predict the weather. If you consult one meteorologist, you might get a decent forecast. But if you ask several meteorologists, each using different models or data sources, and average their predictions, you're likely to get a more accurate result. Similarly, in ensemble learning, diversity ensures that the combined model captures a broader range of patterns in the data.

Research suggests that diversity is as important as individual model accuracy. If all models in an ensemble make similar predictions, combining them adds little value. Studies, such as those referenced in Ensemble Methods (2012), indicate that ensembles with diverse, uncorrelated predictions often outperform those with highly correlated models, even if the latter are individually more accurate.
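To make the point about correlated errors concrete, here is a small simulation under simplified assumptions: every model's error is drawn from a standard normal distribution, so all models are equally accurate on their own, and only the correlation between their errors changes. The function name and parameters are hypothetical.

```python
import math
import random
from statistics import mean

def ensemble_squared_error(n_models, correlation, n_trials=5000, seed=0):
    """Estimate the mean squared error of an averaged ensemble.

    Assumption for illustration: each model's error is standard normal
    (so each model alone has an MSE of about 1), and any two models'
    errors share the given pairwise correlation.
    """
    rng = random.Random(seed)
    shared_weight = math.sqrt(correlation)
    own_weight = math.sqrt(1.0 - correlation)
    squared_errors = []
    for _ in range(n_trials):
        shared_error = rng.gauss(0.0, 1.0)  # mistake common to all models
        errors = [
            shared_weight * shared_error + own_weight * rng.gauss(0.0, 1.0)
            for _ in range(n_models)
        ]
        squared_errors.append(mean(errors) ** 2)  # error of the averaged prediction
    return mean(squared_errors)

diverse = ensemble_squared_error(n_models=10, correlation=0.0)
redundant = ensemble_squared_error(n_models=10, correlation=0.9)
print(f"uncorrelated errors:      {diverse:.2f}")
print(f"highly correlated errors: {redundant:.2f}")
```

Under these assumptions the error of the averaged prediction has variance correlation + (1 - correlation) / n_models, so the two estimates should land near 0.10 and 0.91. Ten equally accurate models help a great deal when their errors are independent and very little when they mostly repeat each other.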

Techniques to Achieve Diversity

Creating diversity in ensemble models is both an art and a science. Here are two widely used techniques, followed by a few other approaches:

Bootstrap Aggregation (Bagging)

Bootstrap aggregation, or bagging, is a technique that introduces diversity by training each model on a different subset of the training data. These subsets are created through random sampling with replacement, meaning some data points may appear multiple times in a subset, while others may be omitted. Each model, therefore, sees a slightly different version of the data, leading to varied predictions.

How it works: From the original dataset, multiple subsets are generated. For example, if you have a dataset of 1,000 customer records, bagging might create 10 subsets of 1,000 records each, where some records are repeated. Each subset trains a separate model (e.g., a decision tree), and the final prediction is obtained by averaging (for regression) or majority voting (for classification).

Example: Bagging is the foundation of random forests, where decision trees are trained on bootstrapped data subsets to ensure diversity.
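The bagging steps described above can be sketched in plain Python. This is only an illustration: the data is synthetic, and a one-nearest-neighbor predictor stands in for the decision tree as the base learner so that the example stays short.

```python
import random
from statistics import mean

def bootstrap_sample(records, rng):
    """Draw len(records) records at random, with replacement."""
    return rng.choices(records, k=len(records))

def fit_nearest_neighbor(sample):
    """Stand-in base learner (an assumption): predict the target of the closest x."""
    def predict(x):
        nearest = min(sample, key=lambda record: abs(record[0] - x))
        return nearest[1]
    return predict

rng = random.Random(42)

# Synthetic data: 1,000 (x, y) records with y roughly equal to 2x plus noise.
records = []
for _ in range(1000):
    x = rng.uniform(0.0, 10.0)
    records.append((x, 2.0 * x + rng.gauss(0.0, 0.5)))

# Ten bootstrap samples of 1,000 records each, one model per sample.
samples = [bootstrap_sample(records, rng) for _ in range(10)]
models = [fit_nearest_neighbor(sample) for sample in samples]

# Share of distinct original records that made it into the first sample.
unique_share = len(set(samples[0])) / len(records)

# Regression ensemble: average the ten models' predictions.
bagged_prediction = mean(model(5.0) for model in models)

print(f"distinct records in first sample: {unique_share:.0%}")
print(f"bagged prediction at x = 5.0: {bagged_prediction:.2f}")
```

Because sampling is done with replacement, each bootstrap sample typically contains only about 63% of the distinct original records, which is what gives every model a slightly different view of the data.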

Model Randomization

Model randomization introduces diversity by adding randomness to the model-building process. This can involve using different algorithms, varying hyperparameters, or incorporating randomness in the training procedure. A classic example is in random forests, where, in addition to bagging, each decision tree considers only a random subset of features at each split, ensuring that trees make different decisions.

How it works: By altering aspects of the model, such as initial weights in neural networks or feature selection in decision trees, randomization ensures that models capture different aspects of the data. For instance, in random forests, at each node of a decision tree, only a random subset of features (e.g., 3 out of 10 features) is considered for splitting, enhancing diversity.

Example: Random forests combine bagging with feature randomization, making them highly effective for tasks like image classification or financial forecasting.
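The effect of considering only 3 of 10 features at each split can be illustrated with a toy sketch. The split-quality scores below are invented and fixed in advance; a real decision tree would compute them from the data at every node, and the function name is hypothetical.

```python
import random
from collections import Counter

def choose_split_feature(split_scores, n_candidates, rng):
    """Pick the best-scoring feature among a random subset of candidates.

    split_scores is a hypothetical list of precomputed split-quality scores,
    one per feature (higher is better).
    """
    candidates = rng.sample(range(len(split_scores)), k=n_candidates)
    return max(candidates, key=lambda feature: split_scores[feature])

rng = random.Random(7)
split_scores = [0.90, 0.85, 0.80, 0.60, 0.55, 0.50, 0.30, 0.20, 0.10, 0.05]

# All 10 features available at every node: feature 0 wins every time.
unrestricted = Counter(choose_split_feature(split_scores, 10, rng) for _ in range(200))

# Only 3 random features per node: other strong features get their turn.
restricted = Counter(choose_split_feature(split_scores, 3, rng) for _ in range(200))

print("all features:    ", dict(unrestricted))
print("3 of 10 features:", dict(restricted))
```

Without the restriction, every tree would keep splitting on the same dominant feature. With it, different trees end up built around different features, which is the source of the extra diversity.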


Other Techniques

Beyond bagging and randomization, diversity can be achieved by:

Training on different feature sets: Using different combinations or transformations of features to capture varied data perspectives.

Using different algorithms: Combining models like decision trees, logistic regression, or neural networks to leverage their unique strengths.

Output representation manipulation: Modifying target values or loss functions to encourage different prediction patterns.

These methods, as noted in Ensemble Machine Learning (2012), help ensure that ensemble models are independent or negatively correlated, maximizing their collective strength.
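As a rough illustration of the "different algorithms" idea, the sketch below trains three deliberately simple and very different classifiers on the same toy data and combines them by majority vote. The data, the three learners, and all function names are assumptions chosen to keep the example self-contained; in practice these would be models such as decision trees, logistic regression, or neural networks.

```python
import math
from collections import Counter

# Toy training data (made up for illustration): ((feature_1, feature_2), label).
train = [
    ((1.0, 1.2), 0), ((1.5, 0.8), 0), ((0.8, 1.9), 0),
    ((3.0, 3.2), 1), ((3.5, 2.9), 1), ((2.8, 3.8), 1),
]

def fit_nearest_neighbor(data):
    """Predict the label of the single closest training point."""
    return lambda point: min(data, key=lambda row: math.dist(row[0], point))[1]

def fit_nearest_centroid(data):
    """Predict the label whose class centroid is closest."""
    centroids = {}
    for label in {row[1] for row in data}:
        points = [row[0] for row in data if row[1] == label]
        centroids[label] = tuple(sum(axis) / len(points) for axis in zip(*points))
    return lambda point: min(centroids, key=lambda label: math.dist(centroids[label], point))

def fit_sum_threshold(data):
    """Predict 1 when the feature sum exceeds the midpoint of the two class means.

    Assumes class 1 has the larger feature sums, which holds for this toy data.
    """
    mean_sum = {
        label: sum(sum(row[0]) for row in data if row[1] == label)
        / sum(1 for row in data if row[1] == label)
        for label in (0, 1)
    }
    threshold = (mean_sum[0] + mean_sum[1]) / 2
    return lambda point: int(sum(point) > threshold)

models = [fit_nearest_neighbor(train), fit_nearest_centroid(train), fit_sum_threshold(train)]

def ensemble_predict(point):
    """Majority vote across the three different learners."""
    votes = Counter(model(point) for model in models)
    return votes.most_common(1)[0][0]

print(ensemble_predict((1.0, 1.0)))  # 0
print(ensemble_predict((3.2, 3.0)))  # 1
```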

Real-World Applications

The power of diverse ensembles is evident in real-world applications. Random forests, which combine bagging and feature randomization, are a standout example. They excel in tasks like predicting customer churn, detecting fraudulent transactions, or classifying medical diagnoses due to their ability to handle complex, noisy data and avoid overfitting. For instance, in the famous Netflix Prize competition, ensemble methods, including diverse model combinations, played a key role in the winning solutions, demonstrating their effectiveness in tackling challenging prediction problems.

Challenges and Considerations

While diversity is crucial, measuring it remains a challenge. There’s no universally agreed-upon metric for diversity, though concepts like correlation between model predictions or error variance are often used as proxies. Additionally, there’s a trade-off: too much diversity can lead to less accurate individual models, while too little can result in redundant predictions. Striking the right balance is key, and techniques like bagging and randomization help achieve this balance effectively.
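One simple proxy for diversity is the disagreement rate between pairs of models, meaning the share of samples on which two models predict different labels. The sketch below computes it for made-up predictions; it is one possible measure among several, not a standard definition.

```python
from itertools import combinations

def disagreement(preds_a, preds_b):
    """Share of samples on which two models predict different labels."""
    differing = sum(a != b for a, b in zip(preds_a, preds_b))
    return differing / len(preds_a)

def mean_pairwise_disagreement(all_preds):
    """Average disagreement over every pair of models in the ensemble."""
    pairs = list(combinations(all_preds, 2))
    return sum(disagreement(a, b) for a, b in pairs) / len(pairs)

# Made-up class predictions from three models on the same eight samples.
model_predictions = [
    [1, 0, 1, 1, 0, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0, 1],
    [0, 0, 1, 0, 1, 1, 0, 0],
]

print(disagreement(model_predictions[0], model_predictions[1]))  # 0.125
print(round(mean_pairwise_disagreement(model_predictions), 3))   # 0.333
```

A value near 0 means the models are almost redundant. A very high value usually means some of them are individually inaccurate, which is the trade-off described above.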

Recommended Reads

A Gentle Introduction to Ensemble Diversity for Machine Learning

A clear and concise introduction to why diversity is crucial for improving ensemble performance.

Understanding the Importance of Diversity in Ensemble Learning

Explores how diversity leads to better predictions and discusses various methods to achieve it.

Comprehensive Guide for Ensemble Models

A detailed guide on ensemble techniques, including how diversity boosts accuracy, with Python code examples.

Flagship programs offered by Business Analytics Institute for upskilling

AI Agents Certification Program | Batch Size - 7

Teaches building autonomous AI agents that plan, reason, and interact with the web. It includes live sessions, hands-on projects, expert guidance, and certification upon completion. Join Elite Super 7s Here

AI Generalist Live Bootcamp | Batch Size - 7

Master AI from the ground up with 16 live, hands-on projects and become a certified Artificial Intelligence Generalist ready to tackle real-world challenges across industries. Join Elite Super 7s Here

Python Live Bootcamp | Batch Size - 7

A hands-on, instructor-led program designed for beginners to learn Python fundamentals, data analysis, and visualization, with real-world projects and expert guidance to build essential programming and analytics skills. Join Elite Super 7s Here

Get a 20% discount today on all live bootcamps. Just send a request to vipul@businessanalyticsinstitute.com

Trending in AI and Data Science

Let’s catch up on some of the latest happenings in the world of AI and Data Science.

California Pushes for AI Transparency

California State Senator Scott Wiener is reigniting efforts with a new bill requiring top AI companies to publicly disclose safety protocols and incident reports, aiming for industry transparency and accountability.

OpenAI Plans Launch of AI Web Browser

OpenAI will soon release an AI-powered web browser designed to challenge Google Chrome, integrating AI features like Operator for smarter, conversational web browsing and deeper ChatGPT integration.

Perplexity Debuts Comet AI Browser

Perplexity has launched Comet, an AI-driven web browser. Initially available to premium users, Comet offers built-in AI search, summaries, and agent-powered automation, aiming to reshape how information is found online.

Trending AI Tool: ML-Ensemble (Python Library)

ML-Ensemble combines predictions from multiple models to improve accuracy and robustness. By leveraging the strengths of diverse algorithms, ensemble methods like bagging, boosting, and stacking reduce overfitting and enhance generalization. Diversity among models is key, ensuring that errors are minimized through collective decision-making.
