Hello!

Welcome to today's edition of Business Analytics Review!

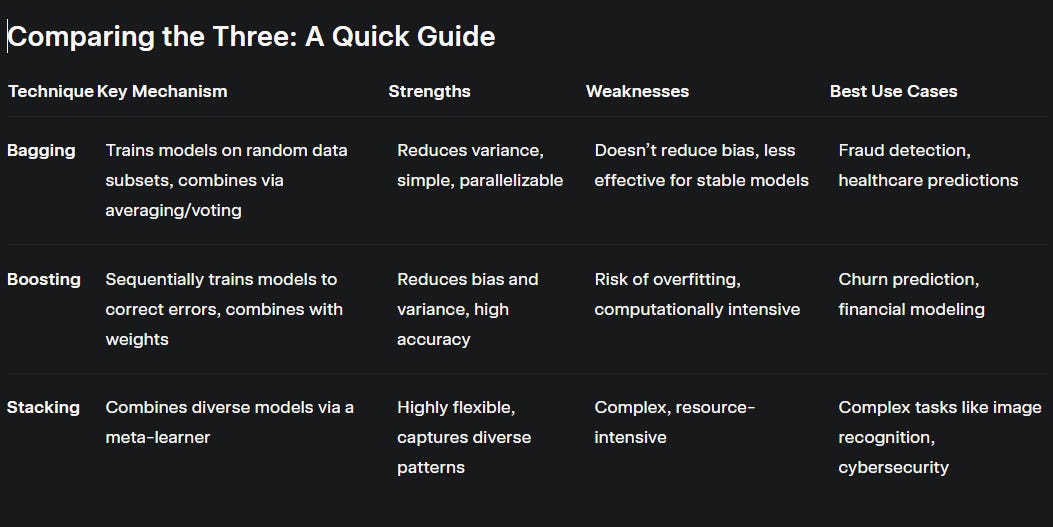

We unpack the fascinating world of Artificial Intelligence and Machine Learning. I’m thrilled to dive into a topic that’s at the heart of many cutting-edge AI applications: Ensemble Learning Techniques. Specifically, we’re exploring the technical intricacies of bagging, boosting, and stacking—three powerhouse methods that make machine learning models smarter, more robust, and ready to tackle real-world challenges. Whether you’re a data scientist or a business leader looking to leverage AI, this edition will break down these techniques in a way that’s both insightful and approachable. Let’s get started!

The Power of Ensemble Learning: Why Combine Models?

Imagine you’re trying to predict whether a customer will churn. A single model might miss subtle patterns, but what if you could combine the strengths of multiple models? That’s the magic of ensemble learning. By blending predictions from several models, ensemble methods often achieve higher accuracy and stability than any single model. Think of it as assembling a dream team: each member brings a unique perspective, and together, they solve problems more effectively. Today, we’ll focus on three key ensemble techniques—bagging, boosting, and stacking—and explore how they work, their strengths, and where they shine in the business world.
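To put a number on the dream-team idea, here is a small sketch under an idealized assumption: every model is right 70% of the time and the models make their mistakes independently. Real models are correlated, so real gains are smaller. The function name and the 70% figure are illustrative and do not come from a specific dataset.

```python
from math import comb

def majority_vote_accuracy(n_models: int, p_correct: float) -> float:
    """Probability that a strict majority of n independent models is correct."""
    needed = n_models // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n_models, k) * p_correct**k * (1 - p_correct) ** (n_models - k)
        for k in range(needed, n_models + 1)
    )

# Assumed for illustration: each model is right 70% of the time, independently.
single = majority_vote_accuracy(1, 0.7)
team = majority_vote_accuracy(11, 0.7)
print(f"1 model: {single:.3f}, 11 models voting: {team:.3f}")
```

An odd number of models keeps the vote free of ties. The more the models' errors overlap, the less the vote helps, which is why the techniques below work hard to make their models differ.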

Bagging: Reducing Variance with Bootstrap Aggregating

How It Works

Bagging, short for Bootstrap Aggregating, is like hosting a brainstorming session where each participant gets a slightly different version of the problem. Here’s the process:

Create Subsets: Take your dataset and create multiple subsets by sampling with replacement (some data points may appear multiple times, others not at all).

Train Models: Train a model (often a decision tree) on each subset. These models are independent, so you can train them in parallel, speeding up the process.

Combine Predictions: For regression, average the predictions; for classification, take a majority vote.

This approach reduces variance, making it ideal for high-variance models like decision trees that tend to overfit. A classic example is the Random Forest algorithm, which builds many decision trees using bagging and also restricts each split to a random subset of features, so the trees are less correlated and their combined prediction is more stable.
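The three steps can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the base model is a deliberately tiny one-threshold "stump" rather than a full decision tree, and the toy data, function names, and seed are all made up for the example.

```python
import random
from collections import Counter

def fit_stump(points):
    """Pick the threshold t with the fewest training errors for 'predict 1 if x > t'."""
    best_t, best_errors = None, None
    for t in sorted({x for x, _ in points}):
        errors = sum(int(x > t) != y for x, y in points)
        if best_errors is None or errors < best_errors:
            best_t, best_errors = t, errors
    return best_t

def fit_bagged_stumps(points, n_models, rng):
    """Steps 1 and 2: draw a bootstrap sample (with replacement) and train one stump on it."""
    models = []
    for _ in range(n_models):
        sample = rng.choices(points, k=len(points))
        models.append(fit_stump(sample))
    return models

def predict(models, x):
    """Step 3 for classification: majority vote over the individual stumps."""
    votes = Counter(int(x > t) for t in models)
    return votes.most_common(1)[0][0]

# Toy data: the label is 1 when x >= 10, with two deliberately flipped (noisy) labels.
points = [(x, int(x >= 10)) for x in range(20)]
points[3] = (3, 1)
points[15] = (15, 0)

rng = random.Random(0)  # fixed seed so the run is repeatable
models = fit_bagged_stumps(points, n_models=25, rng=rng)
print("learned thresholds:", sorted(models))
print("x=0 ->", predict(models, 0), "| x=19 ->", predict(models, 19))
```

Each stump sees a slightly different dataset, so the learned thresholds scatter around the true boundary, and the vote smooths that scatter out. In practice you would likely reach for a library implementation such as scikit-learn's bagging and random forest estimators instead of hand-rolled code.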

Why It’s Useful

Bagging shines when dealing with noisy datasets, where a single model can latch onto noise that an averaged ensemble smooths out. It’s simple to implement, parallelizes well, and is robust against overfitting. Imbalanced datasets usually need an extra step, such as balancing the classes within each bootstrap sample, because plain bagging does not correct class imbalance by itself. In healthcare, bagging has been used to predict patient outcomes by stabilizing predictions across noisy medical data, as highlighted in IBM’s guide on bagging. In finance, it powers fraud detection systems by improving model stability.

Real-World Example

Picture a bank using bagging to detect fraudulent transactions. By training multiple decision trees on different subsets of transaction data, the model can identify patterns of fraud without being swayed by outliers, like a single large transaction that’s legitimate. The result? A more reliable system that catches fraud while minimizing false alarms.

Limitations

Bagging is less effective for stable models like linear regression, where variance is already low. It also doesn’t reduce bias, so if your base model is inherently biased, bagging won’t fix that.
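A standard textbook identity makes both limitations concrete. The symbols are introduced here for illustration and are not from the original text: assume B base models f_b, each with the same variance σ² and the same pairwise correlation ρ between their predictions.

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2,
\qquad
\mathbb{E}\!\left[\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right]
  = \mathbb{E}\left[f_b(x)\right]
```

Adding more models shrinks only the second variance term. A stable model such as linear regression gives nearly identical fits on every bootstrap sample, so ρ is close to 1 and there is little left to gain. The expected prediction does not change at all, which is why bagging leaves bias untouched.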

Boosting: Building Smarter Models Sequentially

How It Works

Boosting is like a relay race where each runner learns from the previous one’s mistakes. Here’s the breakdown:

Start with Equal Weights: Assign equal importance to all data points.

Train Sequentially: Train a model, then increase the weight of misclassified or poorly predicted instances so the next model focuses on them.

Combine Predictions: Combine the models’ predictions, often with weights based on their accuracy.

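Popular boosting algorithms include AdaBoost, which reweights the data to focus on hard-to-classify instances, and XGBoost, a gradient boosting library in which each new tree is fit to the remaining errors of the current ensemble rather than to reweighted data. XGBoost is known for its speed and strong performance in data science competitions.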
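Here is a minimal sketch of the reweighting loop in the AdaBoost style, written in plain Python with one-threshold stumps as the weak models. The toy data and function names are invented for the example. Gradient boosting libraries use a different update, so treat this as an illustration of the three steps only.

```python
import math

def stump_predict(x, threshold, polarity):
    """Weak model: predict `polarity` when x > threshold, otherwise the opposite label."""
    return polarity if x > threshold else -polarity

def best_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the stump with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(w for w, x, y in zip(weights, xs, ys)
                      if stump_predict(x, threshold, polarity) != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best

def adaboost(xs, ys, n_rounds):
    n = len(xs)
    weights = [1.0 / n] * n                      # step 1: equal weights
    ensemble = []                                # (alpha, threshold, polarity) per round
    for _ in range(n_rounds):
        err, threshold, polarity = best_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)    # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # accurate stumps get a bigger say
        ensemble.append((alpha, threshold, polarity))
        # step 2: raise the weight of mistakes, lower the rest, then renormalize
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Step 3: weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

def count_errors(ensemble, xs, ys):
    return sum(predict(ensemble, x) != y for x, y in zip(xs, ys))

# Toy pattern that no single threshold can separate: +1, then -1, then +1 again.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1]

one_round = adaboost(xs, ys, n_rounds=1)
three_rounds = adaboost(xs, ys, n_rounds=3)
print("training errors after 1 round:", count_errors(one_round, xs, ys))
print("training errors after 3 rounds:", count_errors(three_rounds, xs, ys))
```

No single stump can fit this pattern, but each round concentrates weight on the points the previous stumps got wrong, and the weighted vote of three stumps covers all of them.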

Why It’s Useful

Boosting primarily reduces bias, and with sensible tuning it often lowers variance too, making it ideal for improving weak models. It’s widely used in applications like customer churn prediction, where identifying rare events (like churn) is critical. For example, DataCamp’s tutorial shows how boosting enhances decision tree performance on telecom churn datasets.

Real-World Example

Consider an e-commerce company predicting which customers are likely to abandon their carts. Boosting can zero in on subtle patterns—like customers who browse late at night but don’t buy—by iteratively refining the model to focus on these edge cases. This precision helps businesses target interventions, like timely discounts, to retain customers.

Limitations

Boosting can overfit if not carefully tuned, especially with noisy data. It’s also computationally intensive since models are trained sequentially, unlike bagging’s parallel approach.

Stacking: The Art of Combining Diverse Models

How It Works

Stacking, or stacked generalization, is like a chef blending ingredients to create a perfect dish. Here’s how it works:

Train Base Models: Train multiple diverse models (e.g., decision trees, SVMs, neural networks) on the same dataset.

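Generate Meta-Features: Use the predictions from these base models as input features for a meta-learner (often a simple model like logistic regression). To avoid leaking training labels, these predictions are usually made on data each base model did not see, for example through cross-validation.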

Final Prediction: The meta-learner combines the base models’ predictions to make the final output.

Stacking can involve multiple layers, where meta-learners feed into higher-level meta-learners, though this increases complexity.
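A single-layer version of these steps can be sketched in plain Python. To keep it short, this example swaps in a regression task: the base models are a straight-line fit and a nearest-neighbour averager, and the meta-learner is a two-weight least-squares blend rather than logistic regression. The toy target, fold scheme, and all names are assumptions made for illustration.

```python
import math

def fit_linear(xs, ys):
    """Base model 1: ordinary least squares for y = a + b*x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
         / sum((x - mx) ** 2 for x in xs))
    a = my - b * mx
    return lambda x: a + b * x

def fit_knn(xs, ys, k=3):
    """Base model 2: average the targets of the k nearest training points."""
    data = list(zip(xs, ys))
    def predict(x):
        nearest = sorted(data, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)
    return predict

BASE_LEARNERS = [fit_linear, fit_knn]

def out_of_fold_predictions(xs, ys, n_folds=5):
    """Meta-features: predict each point with base models trained on the other folds."""
    n = len(xs)
    meta_rows = [None] * n
    for fold in range(n_folds):
        train = [i for i in range(n) if i % n_folds != fold]
        models = [fit([xs[i] for i in train], [ys[i] for i in train])
                  for fit in BASE_LEARNERS]
        for i in range(fold, n, n_folds):        # the held-out points of this fold
            meta_rows[i] = [m(xs[i]) for m in models]
    return meta_rows

def fit_meta(meta_rows, ys):
    """Meta-learner: least-squares weights so that w1*p1 + w2*p2 approximates y."""
    s11 = sum(p1 * p1 for p1, _ in meta_rows)
    s12 = sum(p1 * p2 for p1, p2 in meta_rows)
    s22 = sum(p2 * p2 for _, p2 in meta_rows)
    t1 = sum(p1 * y for (p1, _), y in zip(meta_rows, ys))
    t2 = sum(p2 * y for (_, p2), y in zip(meta_rows, ys))
    det = s11 * s22 - s12 * s12                  # 2x2 normal equations
    return (t1 * s22 - t2 * s12) / det, (t2 * s11 - t1 * s12) / det

def sse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys))

# Toy target: a linear trend plus a wiggle the straight line cannot capture.
xs = [i / 4 for i in range(40)]
ys = [0.5 * x + math.sin(x) for x in xs]

meta_rows = out_of_fold_predictions(xs, ys)
w_lin, w_knn = fit_meta(meta_rows, ys)
final_models = [fit(xs, ys) for fit in BASE_LEARNERS]  # refit base models on all data

def stacked_predict(x):
    p_lin, p_knn = (m(x) for m in final_models)
    return w_lin * p_lin + w_knn * p_knn

oof_lin = sse([r[0] for r in meta_rows], ys)
oof_knn = sse([r[1] for r in meta_rows], ys)
oof_stack = sse([w_lin * r[0] + w_knn * r[1] for r in meta_rows], ys)
print(f"weights: linear={w_lin:.2f}, knn={w_knn:.2f}")
print(f"out-of-fold squared error: linear={oof_lin:.2f}, knn={oof_knn:.2f}, stacked={oof_stack:.2f}")
```

The meta-learner is trained only on out-of-fold predictions, so it learns how much to trust each base model on data that model has not seen. Because trusting a single base model completely is one of the blends it can choose, the stacked error on those out-of-fold rows is never worse than the better base model's error.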

Why It’s Useful

Stacking’s strength lies in its flexibility—you can combine any models to capture different patterns. It’s particularly effective in complex tasks like image recognition or network intrusion detection, where diverse models excel at different aspects of the problem. Towards Data Science notes that stacking’s multi-level approach can significantly boost performance.

Real-World Example

In cybersecurity, stacking can combine a decision tree’s ability to detect simple patterns with a neural network’s knack for spotting complex anomalies. The meta-learner then decides how much to trust each model, creating a robust system for detecting network intrusions.

Limitations

Stacking is computationally expensive and requires careful tuning to avoid overfitting. It also needs sufficient data, because holding out part of the dataset (or cross-validating) to train the meta-learner leaves less for the base models.

Industry Insights: Where Ensemble Methods Shine

Ensemble techniques are transforming industries by delivering robust, accurate models. In finance, Random Forests (a bagging method) are used for credit risk evaluation, while XGBoost (a boosting algorithm) powers fraud detection systems. In healthcare, bagging aids in gene selection for disease prediction, as noted in Scaler’s article. Stacking is gaining traction in technology for tasks like network intrusion detection, where combining diverse models captures complex attack patterns. These methods are particularly valuable in high-stakes environments where accuracy and reliability are non-negotiable.

A Personal Anecdote

A few years ago, I worked with a retail client struggling to predict inventory demand. Single models kept overfitting to seasonal spikes, leading to costly overstocking. By implementing a Random Forest (bagging), we reduced prediction errors by 20%, saving thousands in inventory costs. Later, we experimented with XGBoost (boosting) to focus on rare demand surges, further improving accuracy. It was a reminder that the right ensemble method can turn a good model into a great one!

Recommended Reads

A Guide to Bagging in Machine Learning

A practical guide to implementing bagging in Python, with examples using decision trees on a telecom churn dataset.

Bagging vs Boosting in Machine Learning

A clear comparison of bagging and boosting, detailing their mechanisms and real-world applications.

Ensemble Methods: Bagging, Boosting, and Stacking

An in-depth exploration of all three techniques, with a focus on stacking’s multi-level approach.

Trending in AI and Data Science

AI Agents’ Final Hurdle

AI agents are advancing rapidly, but their biggest challenge remains: gaining secure, authorized access to apps and websites to perform complex, real-world tasks on behalf of users.

Lawmakers Scrutinize Apple-Alibaba Deal

U.S. lawmakers express concerns over Apple’s potential partnership with Alibaba, citing data privacy, national security, and increased Chinese influence in American technology as key issues.

Nvidia Eyes AI Expansion Beyond Hyperscalers

Nvidia is reportedly planning to broaden its AI business beyond major cloud providers, aiming to reach new markets and diversify its customer base amid growing global AI demand.

Trending AI Tool: H2O.ai

Looking to put ensemble learning into action? Check out H2O.ai, an open-source platform that makes building ensemble models a breeze. Its AutoML feature automates model selection and tuning, supporting Random Forests, Gradient Boosting Machines, and more. Whether you’re predicting customer behavior or optimizing supply chains, H2O.ai’s user-friendly interface and powerful algorithms can accelerate your AI journey. It’s a favorite among data scientists for its scalability and ease of use.
