For any questions, mail us at vipul@businessanalyticsinstitute.com

Hello!

Welcome to today's edition of Business Analytics Review!

Today, we’re diving into the fascinating world of Deep Learning Architectures, focusing on three game-changers: ResNet, Transformer, and Generative Adversarial Networks (GANs). These architectures are the backbone of many cutting-edge AI applications, from recognizing images with pinpoint accuracy to generating human-like text or even creating stunning digital art. Let’s explore their design principles, see how they’re shaping industries, and share some resources to deepen your understanding.

ResNet: Mastering Deep Networks

Imagine you’re building a skyscraper with blocks, but the taller it gets, the harder it is to keep stable. In deep learning, stacking more layers in a neural network can lead to a similar problem: the vanishing gradient issue, where the network struggles to learn as signals weaken on their way back through the layers. Enter ResNet, or Residual Network, introduced in 2015 by Kaiming He and colleagues in their seminal paper, “Deep Residual Learning for Image Recognition” (Deep Residual Learning). ResNet’s genius lies in its use of skip connections, which add a block’s input directly to its output. The layers in between only have to learn the difference (or residual) between the two, and the signal always has a shortcut past them. This makes it easier to train very deep networks (think hundreds or even thousands of layers) without accuracy degrading as depth grows.
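To make the skip connection concrete, here is a minimal sketch in plain Python. It is an illustration only, not the paper’s implementation: real ResNet blocks use convolutions and batch normalization, while this toy version uses two small linear layers on a list of numbers. The helper names (`relu`, `linear`, `residual_block`) are made up for this example.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, x) for x in v]


def linear(v, weights, bias):
    """Toy dense layer: each row of `weights` produces one output value."""
    return [sum(w * x for w, x in zip(row, v)) + b
            for row, b in zip(weights, bias)]


def residual_block(x, w1, b1, w2, b2):
    """Compute relu(F(x) + x), where F is two small linear layers."""
    hidden = relu(linear(x, w1, b1))
    fx = linear(hidden, w2, b2)                     # the residual F(x)
    return relu([f + xi for f, xi in zip(fx, x)])   # skip connection adds x back


x = [1.0, 2.0, 3.0]
zero_w = [[0.0] * 3 for _ in range(3)]
zero_b = [0.0] * 3

# With all-zero weights F(x) is zero, so the block simply passes x through.
identity_out = residual_block(x, zero_w, zero_b, zero_w, zero_b)
print(identity_out)
```

The point of the zero-weight example: a block that has learned nothing still hands its input on unchanged, which is why adding more blocks does not have to make the network worse.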

For example, ResNet-50, a 50-layer network, has been a go-to for image classification tasks, powering applications like facial recognition and medical imaging. Its ability to maintain accuracy even at extreme depths has made it a staple in computer vision, with later architectures like ResNeXt and DenseNet building on its ideas for even better performance.

Transformer: The Power of Attention

If ResNet reshaped computer vision, Transformers have redefined natural language processing (NLP) and beyond. Introduced in 2017 in the groundbreaking paper “Attention Is All You Need” (Attention Paper), Transformers rely on a mechanism called self-attention to process entire sequences of data simultaneously, unlike older recurrent neural networks (RNNs) that worked sequentially. This parallel processing makes Transformers faster and more efficient, especially for tasks like machine translation or text generation.

The core idea is that self-attention allows the model to weigh the importance of different words in a sentence, capturing long-range dependencies. For instance, in the sentence “The cat, which was hiding, jumped,” the Transformer can link “cat” and “jumped” despite the distance between them. This has made Transformers the foundation for models like BERT and GPT, which excel in tasks from chatbots to automated content creation. Beyond NLP, Transformers are now used in vision (Vision Transformers) and even reinforcement learning, showcasing their versatility.
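Here is a rough sketch of the scaled dot-product attention at the heart of the Transformer, written in plain Python so every step is visible. Several things are simplifying assumptions for illustration: the two-number token vectors are hand-picked, the same vectors serve as queries, keys, and values (a real model learns separate projections for each), and the function names are invented for this example. Real implementations also do all of this as a few matrix multiplications instead of loops.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    top = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly does this token match every token in the sequence?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


tokens = ["cat", "hiding", "jumped"]
# Hand-picked toy vectors: "cat" and "jumped" point in similar directions.
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.2]]

outputs, weights = self_attention(vectors, vectors, vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

In the printed row for “jumped”, the weight on “cat” comes out larger than the weight on “hiding”. That is the toy version of linking two words regardless of how far apart they sit in the sentence.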

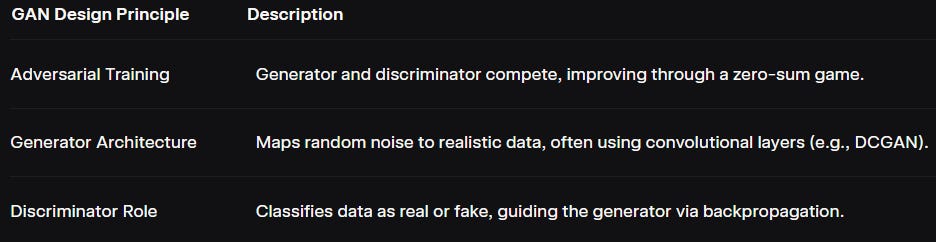

GANs: Creativity Through Competition

Generative Adversarial Networks (GANs), introduced by Ian Goodfellow and colleagues in 2014 (GAN Paper), are like a creative showdown between two neural networks: the generator and the discriminator. The generator crafts synthetic data (like images or audio) from random noise, while the discriminator tries to tell whether each sample is real or generated. Through this adversarial process, the generator gets better at producing realistic outputs, often indistinguishable from real data.
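The tug-of-war can be sketched as two loss functions. The snippet below is a simplified illustration, not a training loop: it assumes the discriminator has already produced a probability between 0 and 1 that each sample is real, and the numbers fed in are invented. The generator loss shown is the commonly used “non-saturating” form, where the generator is rewarded when the discriminator scores its fakes as real.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Average of -[log D(x) + log(1 - D(G(z)))] over a batch.

    d_real: discriminator scores on real samples
    d_fake: discriminator scores on generated samples
    """
    total = sum(math.log(r) + math.log(1.0 - f)
                for r, f in zip(d_real, d_fake))
    return -total / len(d_real)


def generator_loss(d_fake):
    """Average of -log D(G(z)): low when fakes are scored as real."""
    return -sum(math.log(f) for f in d_fake) / len(d_fake)


# Invented scores: early on, the discriminator spots the fakes easily.
early_real, early_fake = [0.9, 0.8], [0.1, 0.2]
# Later, the fakes are good enough that it is close to guessing.
later_real, later_fake = [0.6, 0.5], [0.5, 0.5]

print("early:", discriminator_loss(early_real, early_fake), generator_loss(early_fake))
print("later:", discriminator_loss(later_real, later_fake), generator_loss(later_fake))
```

As the generator improves, its loss falls while the discriminator’s rises. In training, the two networks take turns updating their weights to push their own loss down.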

GANs have sparked a revolution in generative AI, enabling applications like creating photorealistic faces, transforming photos from day to night, or even generating music. For example, NVIDIA’s StyleGAN has produced stunningly realistic images, while CycleGAN enables style transfers without paired datasets. However, GANs can be tricky to train, with challenges like mode collapse (where the generator produces only a narrow range of outputs). Variants like DCGAN, which was designed for more stable training, and Conditional GANs, which let you steer what gets generated, expand their practical use.

Industry Insights and Examples

These architectures aren’t just theoretical; they’re driving real-world impact. ResNet powers medical imaging systems that help detect diseases, like identifying tumors in X-rays. Transformers are behind tools like ChatGPT, which businesses use for customer service automation, and AlphaFold2, which made a breakthrough in predicting protein structures (AlphaFold2). GANs are fueling creative industries, with tools like Artbreeder letting artists blend images to create new designs, and in healthcare they generate synthetic data to train models when real data is scarce.

However, each comes with trade-offs. ResNet’s depth can increase computational costs, Transformers require significant memory for long sequences, and GANs can be unstable during training. Ongoing research in 2025 is addressing these, with innovations like sparse Transformers and improved GAN loss functions.
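To put a rough number on the Transformer memory point: standard self-attention compares every token with every other token, so the table of attention scores grows with the square of the sequence length. The back-of-the-envelope helper below is hypothetical and makes loud assumptions: one attention head, one layer, 4-byte floats, and nothing else in the model counted.

```python
def attention_matrix_bytes(seq_len, num_heads=1, bytes_per_value=4):
    """Memory for one layer's full attention score matrix, in bytes."""
    return seq_len * seq_len * num_heads * bytes_per_value


for n in (1_000, 10_000):
    print(n, "tokens:", attention_matrix_bytes(n) / 1e6, "MB")
```

Ten times the sequence length means one hundred times the memory for this table, which is the cost that sparse attention variants aim to cut.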

Recommended Reads

Introduction to Resnet or Residual Network

A clear, beginner-friendly guide to ResNet’s architecture and its impact on deep learning.

The Illustrated Transformer

A visually rich explanation of Transformers, breaking down self-attention and encoder-decoder structures.

A Gentle Introduction to Generative Adversarial Networks (GANs)

A comprehensive overview of GANs, their mechanics, and creative applications.

Trending in AI and Data Science

Let’s catch up on some of the latest happenings in the world of AI and Data Science.

Microsoft Launches Agent Store Marketplace for AI Models and Tools

Microsoft introduces Agent Store, a new marketplace for AI models and tools, aiming to streamline access and integration for developers and businesses seeking advanced AI solutions.

Samsung Nears Deal with Perplexity for AI Features

Samsung is close to a major agreement with Perplexity to enhance its devices and services with new AI-driven features, broadening its tech ecosystem and user experience.

Meta and Anduril Partner on AI-Powered Military Products

Meta and defense firm Anduril collaborate to develop military products powered by artificial intelligence, signaling a strategic move into advanced defense technology solutions.

Trending AI Tool: Weights & Biases

For those looking to experiment with these architectures, Weights & Biases (W&B) is a trending tool in 2025. It’s a developer-first MLOps platform that streamlines experiment tracking, model visualization, and team collaboration. Used by over 700,000 ML practitioners, including teams at OpenAI and Microsoft, W&B helps you manage complex deep learning projects, from tweaking ResNet hyperparameters to fine-tuning Transformers. Its recent acquisition by CoreWeave in March 2025 signals its growing importance (CoreWeave Acquisition). Explore it at Weights & Biases.

Learn more

Learners who enroll TODAY get two e-books FREE:

“Data Cleaning using Python” (469 USD)

“Data Manipulation using Python” (510 USD)
