Hi!

Welcome to the new edition of Business Analytics Review!

Today we look at data leakage in machine learning. As machine learning continues to reshape industries, understanding the nuances of data handling is essential for developing robust models. In this issue, we cover what data leakage is, its implications, and effective strategies to prevent it.

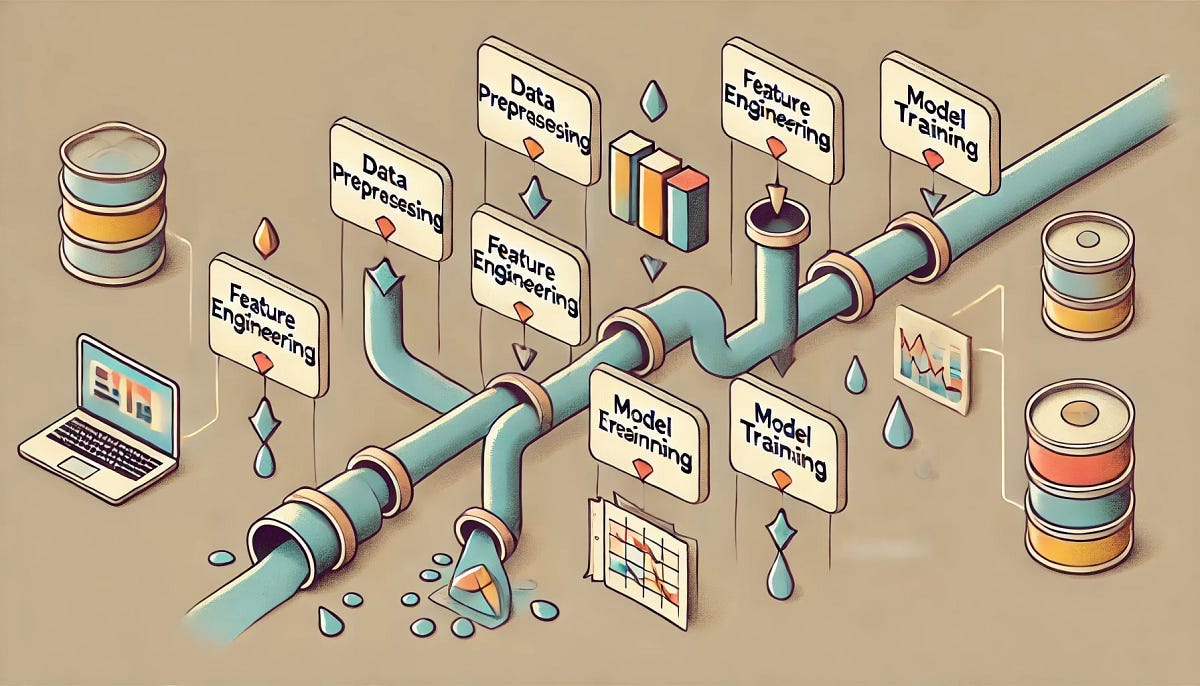

Understanding Data Leakage

Data leakage occurs when a machine learning model inadvertently uses information during training that would not be available at the time of prediction. This can lead to overly optimistic performance metrics during model evaluation but poor real-world performance once the model is deployed. Essentially, the model learns from "leaked" data that it should not have access to, creating a false sense of accuracy.

Common Causes of Data Leakage

Inclusion of Future Information: Using data that only becomes available after the prediction point inflates evaluation scores, because the model will not have that data in production.

Improper Feature Selection: Selecting features that are correlated with the target variable only because they are derived from it or recorded after it, rather than being genuine predictors, can mislead the model.

External Data Contamination: Merging external datasets without proper validation can introduce biases, as well as information that would not be available at prediction time.

Data Preprocessing Errors: Scaling or normalizing data before splitting it into training and test sets can lead to leakage, because the scaling statistics are then computed partly from the test data.

Incorrect Cross-Validation: Including future data points in the training folds during cross-validation can produce misleading evaluations.

For instance, imagine training a model to predict customer churn but inadvertently including information about whether customers have already canceled their subscriptions in the training set. This would lead to a model that performs well during testing but fails when applied to new data.
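
One way to catch this kind of mistake is to record, for each candidate feature, when its value becomes known relative to the prediction date. The sketch below is a minimal illustration in plain Python. The feature names and day offsets are hypothetical and not taken from any real dataset.

```python
# Hypothetical feature catalog: the day each value becomes known,
# relative to the prediction date (negative or zero = known in time).
FEATURE_AVAILABILITY_DAYS = {
    "monthly_spend": -30,
    "support_tickets_last_90d": -1,
    "cancellation_date": 14,  # only known after the customer has churned
    "refund_issued": 7,       # recorded after the prediction date
}


def split_leaky_features(availability):
    """Return (safe, leaky) feature names based on availability at prediction time."""
    safe = sorted(name for name, days in availability.items() if days <= 0)
    leaky = sorted(name for name, days in availability.items() if days > 0)
    return safe, leaky


safe_features, leaky_features = split_leaky_features(FEATURE_AVAILABILITY_DAYS)
print("safe to train on:", safe_features)
print("drop before training:", leaky_features)
```

In a real project the availability information would likely come from data owners or pipeline documentation rather than a hand-written dictionary.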

Strategies to Prevent Data Leakage

Careful Data Splitting: Always split your dataset into training and testing sets before fitting any preprocessing steps, so that preprocessing parameters are learned from the training data only.

Feature Engineering Post-Split: Create new features only after dividing your dataset to ensure no test data influences training.

Use Time-Based Splits: For time-series data, ensure that training sets only include past information relative to the test set.

Regular Audits of Data Pipelines: Continuously monitor and audit your data processing steps to identify potential leakage points.
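
The first three strategies can be sketched together in a few lines. This is a minimal illustration using only the Python standard library, assuming a small, made-up series of time-ordered values. The helper names (`time_based_split`, `fit_scaler`, `transform`) are hypothetical. In practice, libraries such as scikit-learn offer `Pipeline` and `TimeSeriesSplit` for the same purpose.

```python
from statistics import mean, pstdev

# Hypothetical time-ordered observations: (day_index, feature_value).
values = [10, 12, 11, 13, 12, 14, 13, 30, 32, 35]
rows = [(day, float(value)) for day, value in enumerate(values)]


def time_based_split(ordered_rows, test_fraction=0.3):
    """Keep the earliest rows for training and the latest rows for testing."""
    n_test = max(1, round(len(ordered_rows) * test_fraction))
    cut = len(ordered_rows) - n_test
    return ordered_rows[:cut], ordered_rows[cut:]


def fit_scaler(xs):
    """Learn standardization parameters (mean, std) from the given values only."""
    return mean(xs), pstdev(xs)


def transform(xs, params):
    """Standardize values using previously learned parameters."""
    mu, sigma = params
    return [(x - mu) / sigma for x in xs]


# 1. Split first, ordered by time, so training rows precede test rows.
train, test = time_based_split(sorted(rows))
train_x = [value for _, value in train]
test_x = [value for _, value in test]

# 2. Fit preprocessing on the training data only, then reuse it on the test data.
params = fit_scaler(train_x)
train_scaled = transform(train_x, params)
test_scaled = transform(test_x, params)

# The mistake to avoid: statistics computed on all rows include test information.
leaky_params = fit_scaler(train_x + test_x)
print("train-only mean:", params[0], "| leaky mean:", leaky_params[0])
```

The gap between the two means shows how much information about the later test period would flow into the training features if the scaler were fitted before splitting.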

Recommended Reads on Data Leakage

What is Data Leakage in Machine Learning? - A comprehensive overview by IBM that explores causes and effects of data leakage.

Preventing Data Leakage in Machine Learning Models - An insightful article from Shelf.io detailing practical prevention methods for data leakage.

Data Leakage in Machine Learning: Detect and Minimize Risk - Built In offers an expert perspective on identifying and mitigating risks associated with data leakage.

Trending News in the field of Business Analytics

Schneider Electric, Nvidia Partner on AI Data Center Design - New data center reference design supports liquid cooling optimized for Nvidia’s AI processors.

AI-Powered Holograms Converse at AWS re:Invent 2024 - Holograms engage in autonomous dialogue that responds to human input in real time.

Tool of the Day - AI Fairness 360

AI Fairness 360 is an open-source toolkit designed to identify and mitigate bias in machine learning models. It provides a comprehensive set of tools for:

Measuring Bias: It offers a variety of metrics to quantify bias in datasets and models.

Understanding Bias: It provides tools to explain the reasons behind bias.

Mitigating Bias: It includes techniques to reduce bias in models, such as reweighting data, adversarial debiasing, and fair representation learning.

By using AI Fairness 360, developers and researchers can build more equitable and less biased AI systems.

We hope you found this edition insightful. If you enjoyed the content, please give us a thumbs up!

Please don’t hesitate to share your thoughts in the comments below. We look forward to hearing from you!
