A/B Testing Framework

Edition #249 | 04 February 2026

Hello!

Welcome to today’s edition of Business Analytics Review!

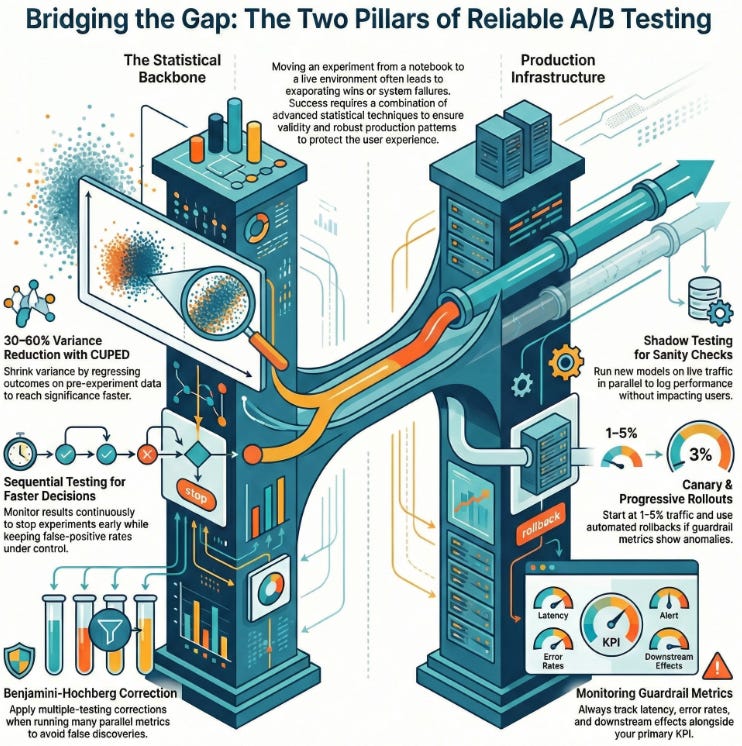

Today’s edition dives deeper into A/B Testing Frameworks, zeroing in on the statistical methods that give us genuine confidence in our results and the production implementation details that turn promising offline experiments into reliable, scalable systems that actually move the needle on revenue, retention, or efficiency.

If you’ve ever celebrated a “statistically significant” win in a notebook only to watch it evaporate in production (or worse, quietly harm a key guardrail metric), you know the stakes. I’ve lived through that exact scenario more than once: a new recommendation engine looked fantastic on historical holdout data, but once live it quietly increased latency and drove mobile users away. A robust A/B framework isn’t just a nice-to-have; it’s the difference between shipping breakthroughs and shipping regret.

The Statistical Backbone

Everything starts with controlled randomization and rigorous hypothesis testing. We define a crisp null hypothesis (“no meaningful difference between variants”), decide on the minimum detectable effect (MDE) that actually matters to the business, run a power analysis to size the experiment, and then let the data speak through p-values, confidence intervals, and effect sizes.
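To make the sizing step concrete, here is a minimal sketch of a power calculation for a conversion-rate test, using the standard normal-approximation formula for comparing two proportions. The function name, the 10% baseline, and the one-point MDE are illustrative assumptions, not figures from a real experiment.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, mde_abs, alpha=0.05, power=0.80):
    """Approximate users needed per variant for a two-sided two-proportion z-test.

    baseline_rate: control conversion rate (e.g. 0.10)
    mde_abs: minimum detectable effect as an absolute difference (e.g. 0.01)
    """
    p1 = baseline_rate
    p2 = baseline_rate + mde_abs
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance_sum / mde_abs ** 2)


if __name__ == "__main__":
    # Hypothetical inputs: 10% baseline conversion, +1 percentage point MDE.
    print(sample_size_per_arm(0.10, 0.01))
```

Halving the MDE roughly quadruples the required sample, which is why agreeing on the MDE with the business comes before anything else.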

A few modern techniques that have become table stakes in 2026:

CUPED (Controlled-experiment Using Pre-Experiment Data): By regressing the outcome on pre-experiment covariates (e.g., a user’s historical conversion rate), we can shrink variance by 30–60% in many real-world cases. I’ve seen experiments that would have needed 4–6 weeks reach significance in 2–3 weeks with CUPED applied properly.

Sequential testing: Instead of committing to a fixed sample size, you can monitor results continuously and stop early (or continue) while keeping false-positive rates under control. Methods like always-valid p-values or group-sequential designs are lifesavers when traffic is unpredictable.

Multiple-testing correction: When you’re running 15–20 metrics or dozens of experiments in parallel, naive p < 0.05 will flood you with false discoveries. Benjamini-Hochberg FDR is usually the sweet spot between conservatism and practicality.
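Two of the techniques above are compact enough to sketch in a few lines. The block below is a simplified illustration, assuming a single pre-experiment covariate for CUPED and a plain list of p-values for Benjamini-Hochberg; the function names are made up for this example, and in a real analysis the CUPED coefficient would be estimated on data pooled across both variants.

```python
from statistics import mean, variance


def cuped_adjust(outcomes, pre_period):
    """Return CUPED-adjusted outcomes: y - theta * (x - mean(x)).

    theta = cov(x, y) / var(x) is the regression slope of the outcome on the
    pre-experiment covariate. The adjustment leaves the mean unchanged and
    removes the share of variance explained by the covariate.
    """
    x_bar, y_bar = mean(pre_period), mean(outcomes)
    cov_xy = sum(
        (x - x_bar) * (y - y_bar) for x, y in zip(pre_period, outcomes)
    ) / (len(outcomes) - 1)
    theta = cov_xy / variance(pre_period)
    return [y - theta * (x - x_bar) for x, y in zip(pre_period, outcomes)]


def benjamini_hochberg(p_values, fdr=0.05):
    """Return one boolean per p-value: True where the null is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the largest rank k with p_(k) <= (k / m) * fdr,
    # then reject every hypothesis ranked at or below k.
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * fdr:
            cutoff_rank = rank
    rejected = [False] * m
    for idx in order[:cutoff_rank]:
        rejected[idx] = True
    return rejected


if __name__ == "__main__":
    # Hypothetical per-user data: the outcome tracks pre-period behaviour closely.
    pre = [1, 2, 3, 4, 5, 6]
    post = [1.1, 2.3, 2.9, 4.2, 4.8, 6.1]
    adjusted = cuped_adjust(post, pre)
    print(variance(post), variance(adjusted))

    # Hypothetical p-values for eight metrics in one experiment.
    p_vals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]
    print(benjamini_hochberg(p_vals))
```

In the p-value example, a naive p < 0.05 cutoff would flag five metrics, while the Benjamini-Hochberg rule at a 5% FDR keeps only the two strongest.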

Don’t forget the classics that still bite teams: peeking without correction, failing to pre-commit to an analysis plan, or analyzing ratio metrics (revenue per user, CTR) as if they were simple averages, without the delta method or CUPED-style adjustments. Getting the statistics right upfront saves weeks of wasted iteration later.
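For the ratio-metric pitfall, here is a rough sketch of the delta-method variance for a metric like CTR, assuming users are the randomization unit and each user contributes a numerator (clicks) and a denominator (impressions). The function name and the sample data are hypothetical.

```python
from statistics import mean


def ratio_metric_variance(numerators, denominators):
    """Delta-method estimate for sum(numerators) / sum(denominators).

    Inputs are per-user totals. Returns (ratio, variance_of_ratio), using
    Var(R) ~= (var_n - 2*R*cov_nd + R^2*var_d) / (n * mean_d^2).
    """
    n = len(numerators)
    mu_n, mu_d = mean(numerators), mean(denominators)
    ratio = mu_n / mu_d
    var_n = sum((a - mu_n) ** 2 for a in numerators) / (n - 1)
    var_d = sum((b - mu_d) ** 2 for b in denominators) / (n - 1)
    cov_nd = sum(
        (a - mu_n) * (b - mu_d) for a, b in zip(numerators, denominators)
    ) / (n - 1)
    var_ratio = (var_n - 2 * ratio * cov_nd + ratio ** 2 * var_d) / (n * mu_d ** 2)
    return ratio, var_ratio


if __name__ == "__main__":
    # Hypothetical per-user clicks and impressions.
    clicks = [0, 3, 1, 5, 2]
    impressions = [10, 40, 8, 60, 25]
    ctr, var_ctr = ratio_metric_variance(clicks, impressions)
    print(ctr, var_ctr ** 0.5)  # point estimate and its standard error
```

When every user has the same denominator, the formula collapses to the familiar variance of a mean, which is a handy sanity check.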

Production Implementation That Actually Works

The notebook-to-production gap is where most experiments die. The infrastructure you build here determines whether your statistical rigor actually translates to trustworthy business decisions.

Key patterns that separate mature teams from the rest:

Traffic splitting & routing: Feature flags (LaunchDarkly, Unleash) for simple cases; model routers or graph-based serving (Seldon Core, KServe, TorchServe with traffic splits, SageMaker multi-variant endpoints) for ML models. Hash-based user assignment keeps each user in the same variant across requests and sessions.

Shadow testing: The new model runs on live traffic in parallel, but its predictions are only logged, never served. That makes it perfect for sanity-checking latency, cost, drift, and offline agreement with the champion before any user sees a difference.

Canary & progressive rollouts: Start at 1–5% traffic, monitor guardrails, then ramp automatically. Automated rollback on anomaly detection (spike in error rate, latency, or a secondary KPI) has saved more than one launch I’ve been part of.

Interleaved experiments: Especially powerful for ranking/retrieval models. Show results from both models in the same session and measure preference directly.

Guardrail metrics & monitoring: Never optimize only the primary KPI. Always watch latency, error rates, fairness/bias slices, downstream effects (support tickets, churn), and system health. Set up real-time dashboards and alerts so bad experiments can’t linger.
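Hash-based assignment from the first pattern above is simple enough to sketch directly. This is an illustrative version, assuming string user IDs and a per-experiment salt; the function name, variant labels, and weights are hypothetical, and feature-flag platforms implement their own bucketing.

```python
import hashlib


def assign_variant(user_id, experiment_name,
                   variants=("control", "treatment"), weights=(0.5, 0.5)):
    """Deterministically map a user to a variant.

    Salting the hash with the experiment name keeps assignments independent
    across experiments, while the same user always lands in the same bucket
    for a given experiment.
    """
    key = f"{experiment_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if bucket < cumulative:
            return variant
    return variants[-1]  # guards against floating-point rounding in the weights


if __name__ == "__main__":
    # A 5% canary is the same function with different weights.
    print(assign_variant("user-42", "ranker-v2", weights=(0.95, 0.05)))
```

Because the assignment depends only on the user ID and the experiment name, no lookup table is needed, and ramping a canary from 5% to 50% only changes the weights.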

One quick war story: We once shadowed a new personalization model that looked 12% better on offline metrics. In shadow we discovered it increased 95th-percentile latency by 800 ms on mobile, enough to tank conversion for that segment. We fixed the inference path and re-tested; the final lift was still +9% with no latency regression. Shadow + guardrails turned a potential disaster into a clean win.
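The kind of check that catches a regression like this can be very small. Below is a minimal sketch that compares 95th-percentile latency between champion and shadow logs; the function names, the synthetic latencies, and the 100 ms budget are assumptions for illustration, not the setup from the story above.

```python
from statistics import quantiles


def p95(latencies_ms):
    """95th percentile of a list of latencies in milliseconds."""
    # With n=100, quantiles() returns 99 cut points; index 94 is the 95th percentile.
    return quantiles(latencies_ms, n=100, method="inclusive")[94]


def latency_guardrail_ok(champion_ms, challenger_ms, max_regression_ms=100.0):
    """Return (passed, regression_ms) for a p95 latency guardrail."""
    regression = p95(challenger_ms) - p95(champion_ms)
    return regression <= max_regression_ms, regression


if __name__ == "__main__":
    # Synthetic logs: the shadow model is uniformly 800 ms slower.
    champion = [float(ms) for ms in range(100, 200)]
    shadow = [ms + 800.0 for ms in champion]
    print(latency_guardrail_ok(champion, shadow))
```

Running the same check per segment (mobile versus desktop, for example) matters, since a regression confined to one segment can hide inside a healthy global average.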

Recommended Reads

Building Production A/B Testing Infrastructure for ML Models

A hands-on, code-level guide to deploying safe, observable model A/B tests at scale using Seldon Core v2: exactly the infrastructure pattern you’ll reach for when your next big model is ready for traffic.

A/B Testing for Machine Learning Models: How to Compare Models with Confidence

Straightforward breakdown of traffic splitting, metric selection, statistical testing, and interpretation when the variants are entire ML models rather than simple UI changes, with clear cloud-native examples.

AI Production Experiments: The Art of A/B Testing and Shadow Deployments

Excellent primer on choosing between full A/B, shadow, and interleaved testing, defining success criteria, and avoiding common statistical and operational pitfalls in live environments.

Trending in AI and Data Science

Let’s catch up on some of the latest happenings in the world of AI and Data Science.

China conditionally approves DeepSeek to buy Nvidia’s H200 chips - sources

China has conditionally approved DeepSeek’s purchase of Nvidia’s H200 AI chips amid US export curbs. Sources say the approval allows limited imports for research, balancing tech needs with restrictions. This eases tensions in AI hardware access.

Perplexity signs $750 million AI cloud deal with Microsoft, Bloomberg News reports

Perplexity AI inked a $750 million cloud deal with Microsoft for compute resources. The pact boosts Perplexity’s infrastructure for advanced AI models. It highlights growing partnerships in AI scaling.

Amazon in talks to invest up to $50 billion in OpenAI

Amazon is negotiating an investment of up to $50 billion in OpenAI. Discussions aim to deepen ties in AI development and cloud services. The deal could reshape the AI investment landscape.

Trending AI Tool: Evolv AI

Still one of the most exciting platforms in 2026. It goes far beyond classic A/B testing: it uses active learning and behavioral signals to ideate, prototype, and continuously optimize digital experiences in real time. Teams routinely see double-digit lifts in conversion, revenue, or engagement with dramatically less manual setup and analysis. If the traditional “design → launch → wait weeks → analyze” cycle feels outdated to you, this is the future you can start using today.
